Datatypes

Make searching easier by processing data into discrete events and defining fields.

Datatypes are sets of rules that Cribl Search follows to break data (bytes) from Datasets into discrete events, timestamp them, and parse them as needed. This helps categorize events and makes searching easier, because you can address events through their datatype field.

Cribl Search ships with a number of built-in Datatypes, and you can author, test, and validate custom Datatype rules using the Cribl Search UI.

With appropriate Permissions, you can also export, share, and import Datatypes in Packs.

Using Datatypes

To search a Dataset for events processed by a specific rule, filter on the datatype field. For example, dataset=myDataset datatype=aws_vpcflow. Note that the value is not the ID of a Datatype ruleset; rather, it is the datatype value assigned by the rule.

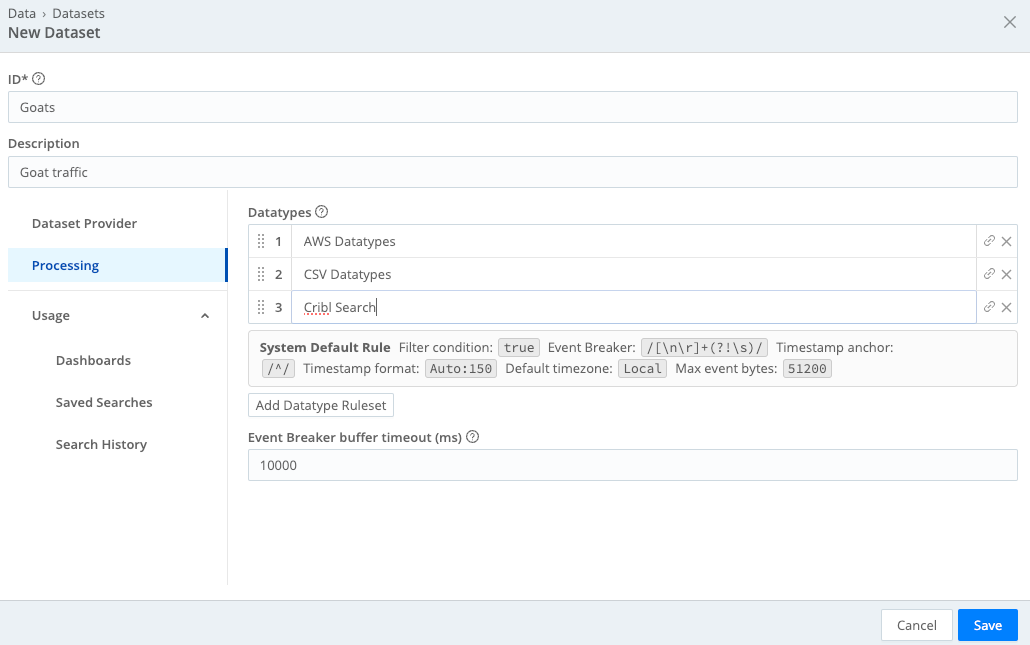

A Dataset can be associated with one or more Datatype rulesets. Rulesets are evaluated top-down, and each consists of an ordered list of rules that are also evaluated top-down.

Cribl Search matches the data against the rules in this order and processes it using the first rule that matches.

When you write data to an object store for later analysis by Cribl Search, ensure that events are homogeneously formatted within each file. This consistent structure is necessary in order for Cribl Search to accurately apply its Event Breakers and Parsers. Cribl Search determines a file’s Datatype by evaluating the file’s initial data against Datatype filter conditions, and then applies the first-matched Datatype to the remainder of the file.
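The first-match behavior described above can be modeled in a few lines of Python. This is a hypothetical sketch, not Cribl Search's implementation: the Rule class, the matches callable (standing in for a rule's JavaScript filter expression), and the sample_chars size of the "initial data" are all assumptions. Rulesets are scanned top-down, then the rules inside each ruleset, and the first rule whose filter matches the start of the file is the one applied to the whole file.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Rule:
    name: str
    datatype: str
    # Hypothetical stand-in for the rule's JavaScript filter expression.
    matches: Callable[[str], bool]

def pick_rule(rulesets: List[List[Rule]], file_text: str,
              sample_chars: int = 4096) -> Optional[Rule]:
    """Return the first matching rule, scanning rulesets and rules top-down."""
    sample = file_text[:sample_chars]  # only the file's initial data is evaluated
    for ruleset in rulesets:
        for rule in ruleset:
            if rule.matches(sample):
                return rule  # first match wins; it is reused for the rest of the file
    return None  # no rule matched
```

Because only the opening bytes are evaluated, a file that mixes formats would have every later event processed with the rule chosen for its first events, which is why homogeneous files matter.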

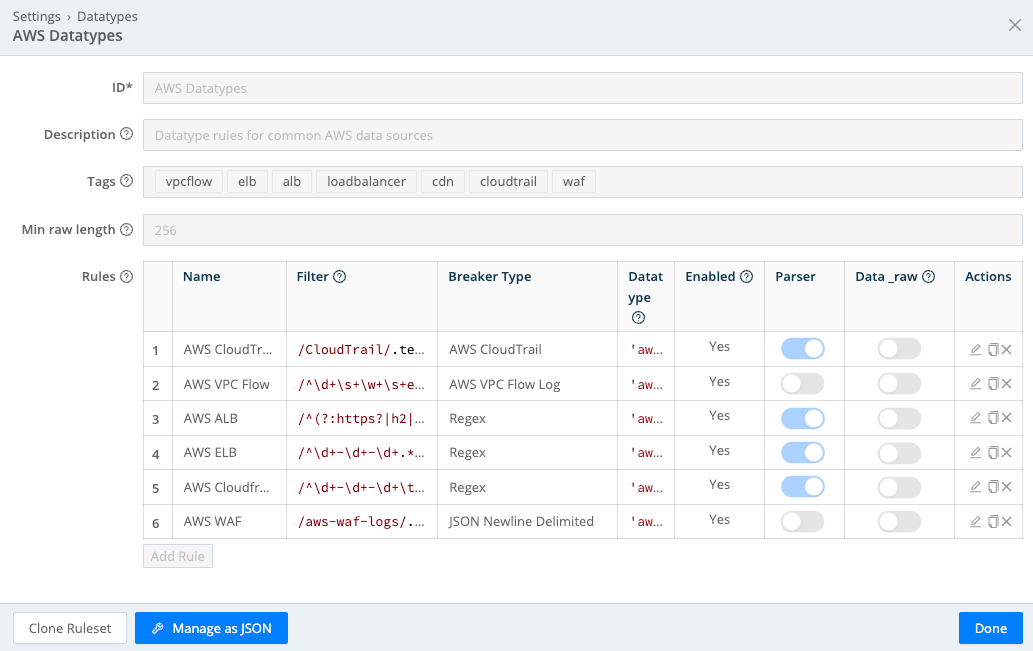

Here’s an example of a Datatype ruleset called “AWS Datatypes”:

Datatyping is applied before search terms are evaluated. This means that if a rule adds a field (for example, through parsing), you can reference that field in the query, such as in filter or projected terms.

Here’s an example of a Dataset configured with multiple Datatype rulesets:

Use the wildcard * to specify multiple Datatype rulesets with similar IDs. Matching is case-sensitive. For example:

- A* matches Datatype IDs starting with the letter A.

- *A matches Datatype IDs ending with the letter A.

- *A* matches Datatype IDs containing the letter A.
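To preview which IDs a pattern would select, Python's fnmatch.fnmatchcase offers a close analogue: it is case-sensitive and its * matches any run of characters. The ruleset IDs below are hypothetical, and Cribl Search's matcher may differ in edge cases (fnmatch also treats ? and [...] as wildcards).

```python
from fnmatch import fnmatchcase

# Hypothetical Datatype ruleset IDs, for illustration only.
ruleset_ids = ["AWS", "Apache", "azure", "CiscoA", "Syslog"]

def select(pattern: str) -> list:
    """Return the IDs that match pattern; matching is case-sensitive."""
    return [rid for rid in ruleset_ids if fnmatchcase(rid, pattern)]

print(select("A*"))   # ['AWS', 'Apache']            (starts with A)
print(select("*A"))   # ['CiscoA']                   (ends with A)
print(select("*A*"))  # ['AWS', 'Apache', 'CiscoA']  (contains A)
```

Note that azure is never selected, because a lowercase a does not match an uppercase A.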

Order of Operations

The process of datatyping is as follows:

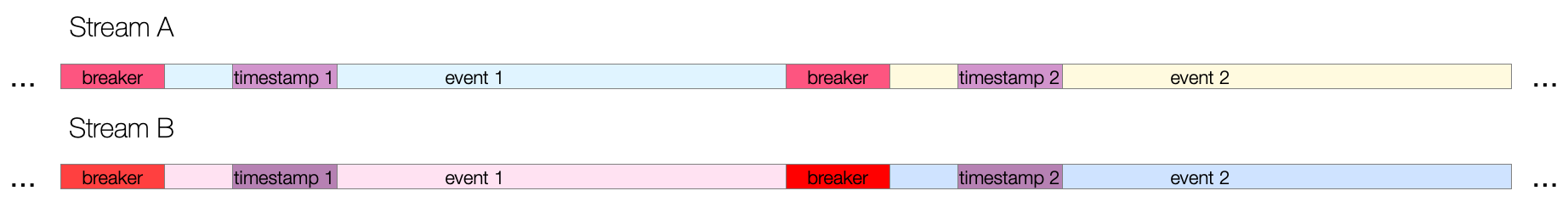

- Event Breaking - Breaks raw bytes into discrete events.

- Timestamping - Assigns timestamps to events.

- Parsing - Parses fields from events.

- Add Fields - Adds additional fields.
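To make the order concrete, here is a deliberately simplified Python model of the four stages applied to raw bytes. Every stage's logic is an assumption chosen for brevity (newline event breaking, a leading 10-digit epoch timestamp with a current-time fallback, key=value parsing, and a fixed datatype value); real rules configure each stage independently.

```python
import re
import time

def datatype_pipeline(data: bytes, datatype: str) -> list:
    events = []
    # 1. Event Breaking: split raw bytes wherever one or more newlines occur.
    for chunk in re.split(rb"[\r\n]+", data):
        if not chunk:
            continue
        raw = chunk.decode("utf-8", errors="replace")
        event = {"_raw": raw}
        # 2. Timestamping: use a leading 10-digit epoch value, else the current time.
        m = re.match(r"(\d{10})\b", raw)
        event["_time"] = int(m.group(1)) if m else time.time()
        # 3. Parsing: extract key=value pairs from _raw into fields.
        event.update(re.findall(r"(\w+)=(\S+)", raw))
        # 4. Add Fields: tag every event with the rule's datatype.
        event["datatype"] = datatype
        events.append(event)
    return events
```

Because Add Fields runs last, the datatype field is present on every event regardless of what the parser extracted.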

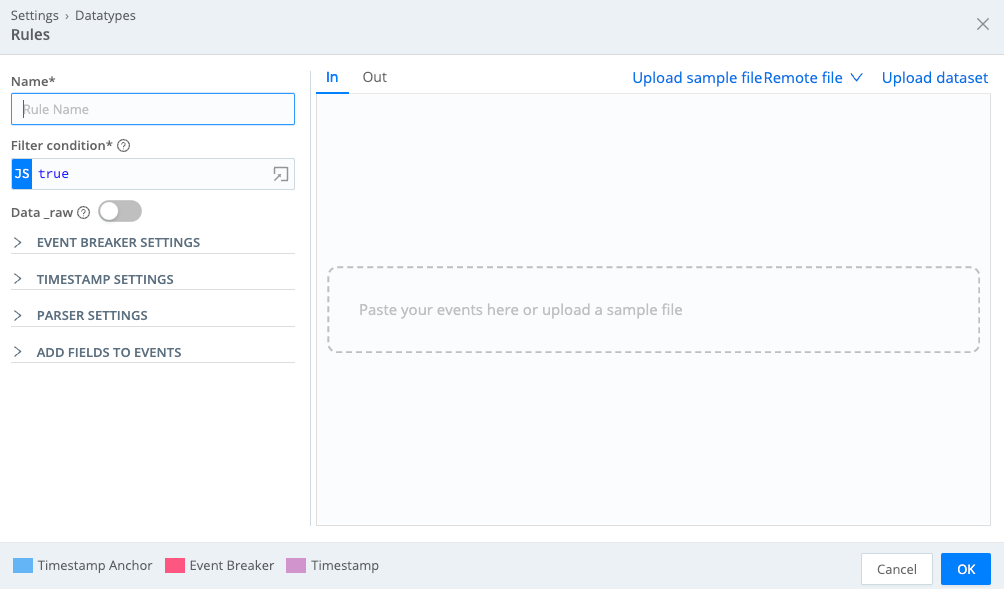

Create a rule

On the Datatypes page, select an existing Datatype ruleset, or select Add Datatype to create a new ruleset. Within a Datatype configuration modal, select Add Rule to open the Rules modal shown below:

Each rule includes the following components.

Filter Condition

As data is processed, Cribl Search evaluates the rule's filter expression (JavaScript). If the expression evaluates to true, the rule's configuration is applied to the Dataset's data. Otherwise, Cribl Search evaluates the next rule down the list.

Event Breaker Settings

Event Breakers configure how Cribl Search converts data into discrete events. Cribl Search provides several different formats for Event Breakers. See below for specific information on different Event Breaker Types.

Timestamp Settings

After data is processed into events, Cribl Search will attempt timestamping. First, a timestamp anchor will be located inside the event. Next, starting there, the engine will try to do one of the following:

- Scan up to a configurable depth into the event and autotimestamp, or

- Timestamp using a manually supplied strptime format, or

- Timestamp the event with the current time.

The closer the anchor is to the timestamp pattern, the better the performance and accuracy, especially if an event contains multiple timestamps. For the manually supplied option, the anchor must position the engine immediately before the point where the timestamp pattern begins.
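The manually-supplied-format path can be sketched in Python under a few assumptions: the anchor is a regular expression, the format uses Python's strptime directives (close to, but not necessarily identical to, the dialect Cribl Search accepts), and times without a zone are treated as UTC. The function name and the scan_depth default are hypothetical.

```python
import re
from datetime import datetime, timezone
from typing import Optional

def timestamp_after_anchor(raw: str, anchor: str, fmt: str,
                           scan_depth: int = 150) -> Optional[float]:
    """Locate the anchor, then parse a timestamp that starts right after it.
    Returns epoch seconds, or None (a caller could fall back to the current time)."""
    m = re.search(anchor, raw)
    if m is None:
        return None
    window = raw[m.end():m.end() + scan_depth]
    # strptime needs an exact match, so try the longest prefix first.
    for end in range(len(window), 0, -1):
        try:
            dt = datetime.strptime(window[:end], fmt)
        except ValueError:
            continue
        if dt.tzinfo is None:
            dt = dt.replace(tzinfo=timezone.utc)
        return dt.timestamp()
    return None
```

With the anchor "ts":" on a log line such as {"level":"debug","ts":"2021-02-02T10:38:46.365Z",...}, parsing starts exactly at the timestamp. An anchor such as "level":" instead leaves the engine at the text debug, and no timestamp is found, which is why the anchor must sit right before the pattern.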

Parser Settings

The Parser associated with a rule can be used to extract fields out of events that you can reference in searches. Each field in the parsed event contains a key-value pair, where the field name is the key.

Parsers are very similar to the Parser function that can be configured in Cribl Stream pipelines.

You can configure a new Parser or select one from the library to configure. Based on the Type selected, you’ll have the following configurations:

- Type: Select the format of your data: CSV, Extended Log File Format, Common Log Format, Key=Value Pairs, JSON Object, Delimited values, Regular Expression, or Grok.

- Library: Select a Parser defined in the library. The library is located at Knowledge > Parsers.

- Source field: The field the Parser will extract from. Defaults to _raw.

- Destination field: Provide a name for the field to which the extracted data will be assigned.

- List of fields: The fields to be extracted, in the desired order. If you don’t specify any fields, the Parser will generate them automatically.

- Fields to keep: The fields to keep. Supports wildcards (*). Takes precedence over Fields to remove.

- Fields to remove: The fields to remove. Supports wildcards (*). You cannot remove fields that match Fields to keep.

- Fields filter expression: Expression evaluated against the {index, name, value} context. Return truthy to keep a field, or falsy to remove it.

The following additional settings apply only to the indicated Type:

Key=Value Pairs

- Clean fields: Whether to clean field names by replacing characters outside [a-zA-Z0-9] with an underscore (_).

Advanced Settings

- Allowed key characters: A list of characters that can appear in a key name, even though they’re normally separator or control characters.

- Allowed value characters: A list of characters that can appear in a value, even though they’re normally separator or control characters.
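As an illustration of Key=Value parsing with Clean fields enabled, here is a minimal Python sketch. The tokenizer (whitespace-separated pairs, values optionally wrapped in double quotes) is an assumption for the example, not the Parser's actual grammar, and the Advanced Settings are not modeled.

```python
import re

# Hypothetical tokenizer: key=value pairs separated by whitespace,
# with values optionally wrapped in double quotes.
KV_RE = re.compile(r'([^\s=]+)=("[^"]*"|\S+)')

def parse_kv(raw: str, clean_fields: bool = True) -> dict:
    fields = {}
    for key, value in KV_RE.findall(raw):
        if clean_fields:
            # Clean fields: replace anything outside [a-zA-Z0-9] with "_".
            key = re.sub(r"[^a-zA-Z0-9]", "_", key)
        if len(value) >= 2 and value[0] == value[-1] == '"':
            value = value[1:-1]  # strip the surrounding quotes
        fields[key] = value
    return fields
```

For example, src.ip=10.0.0.1 yields a field named src_ip when Clean fields is on, and src.ip when it is off.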

Delimited Values

- Delimiter: Delimiter character to use to split values.

- Quote character: Character used to quote values. Required if values contain the delimiter character.

- Escape character: Character used to escape characters within a value.

- Null value: String value that should be treated as null or undefined.
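Python's standard csv module exposes the same four knobs, so it can approximate how these settings interact. In this sketch the delimiter (|), escape character (\), and null value (-) are example choices, and null values are returned as None; Cribl Search's own Parser may handle edge cases differently.

```python
import csv
import io

def parse_delimited(line: str, delimiter: str = "|", quote_char: str = '"',
                    escape_char: str = "\\", null_value: str = "-") -> list:
    """Split one line into values; values equal to null_value become None."""
    reader = csv.reader(io.StringIO(line), delimiter=delimiter,
                        quotechar=quote_char, escapechar=escape_char)
    values = next(reader)
    return [None if v == null_value else v for v in values]
```

Here a quoted value ("a|b") and an escaped delimiter (x\|y) both survive as single values, while the bare - is treated as null.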

Regular Expression

- Regex: Regex literal with named capturing groups, for example, (?<foo>bar), or with _NAME_ and _VALUE_ capturing groups, for example, (?<_NAME_0>[^ =]+)=(?<_VALUE_0>[^,]+).

- Additional regex: Add another regular expression to match against other data.

- Max exec: The maximum number of times to apply the regex to the source field when the global flag is set, or when using named capturing groups.

- Field name format expression: JavaScript expression to format field names when _NAME_n and _VALUE_n capturing groups are used. The original field name is in the global variable name. For example, to append XX to all field names: `${name}_XX` (backticks are literal). If empty, names will be sanitized using this regex: /^[_0-9]+|[^a-zA-Z0-9_]+/g. You can access other field values via __e.<fieldName>.

- Overwrite existing fields: Overwrite existing event fields with extracted values. If set to No, existing fields will be converted to an array.
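The _NAME_n/_VALUE_n convention can be sketched in Python as follows. Two assumptions apply: Python spells named groups (?P<name>...) rather than (?<name>...), and the name_format callable stands in for the JavaScript Field name format expression. The default sanitization regex is not modeled.

```python
import re

def extract_name_value(raw, pattern, name_format=None, event=None, overwrite=True):
    """Apply pattern globally; each _NAME_n group names a field whose value
    is the matching _VALUE_n group."""
    event = dict(event or {})
    for m in re.finditer(pattern, raw):
        groups = m.groupdict()
        for group, name in groups.items():
            if not group.startswith("_NAME_") or name is None:
                continue
            value = groups.get("_VALUE_" + group[len("_NAME_"):])
            if name_format is not None:
                name = name_format(name)  # stands in for the format expression
            if name in event and not overwrite:
                # Overwrite = No: keep old and new values together as an array.
                old = event[name]
                event[name] = (old if isinstance(old, list) else [old]) + [value]
            else:
                event[name] = value
    return event
```

For example, the pattern (?P<_NAME_0>[^ =]+)=(?P<_VALUE_0>[^,]+) applied to a=1, b=2 yields the fields a and b; passing lambda n: n + "_XX" mirrors the `${name}_XX` example.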

Grok

- Pattern: Grok pattern to extract fields. Syntax supported: %{PATTERN_NAME:FIELD_NAME}.

- Additional Grok patterns: Add another pattern to match other data.
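Grok is essentially a macro layer over regular expressions: each %{PATTERN_NAME:FIELD_NAME} expands to a named capturing group. The Python sketch below shows that expansion with a tiny, hypothetical pattern table; real Grok libraries ship many more patterns with more precise definitions, and Additional Grok patterns are not modeled.

```python
import re

# A tiny, hypothetical subset of Grok patterns, for illustration only.
PATTERNS = {
    "INT": r"[+-]?\d+",
    "WORD": r"\w+",
    "NOTSPACE": r"\S+",
    "IP": r"\d{1,3}(?:\.\d{1,3}){3}",
}
TOKEN = re.compile(r"%\{(\w+)(?::(\w+))?\}")

def grok_to_regex(pattern: str) -> str:
    """Expand %{PATTERN_NAME:FIELD_NAME} tokens into Python regex groups."""
    def expand(m):
        body = PATTERNS[m.group(1)]
        field = m.group(2)
        # A named token captures a field; an unnamed one just matches.
        return f"(?P<{field}>{body})" if field else f"(?:{body})"
    return TOKEN.sub(expand, pattern)

def grok_parse(pattern: str, text: str):
    m = re.search(grok_to_regex(pattern), text)
    return m.groupdict() if m else None
```

For example, %{IP:clientip} %{WORD:method} %{INT:status} applied to 10.0.0.1 GET 200 yields the fields clientip, method, and status.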

Add Fields to Events

After events have been timestamped and parsed, you can add one or more fields here as key-value pairs. In each field’s Value Expression, you can compute the field value using a JavaScript expression. Each rule should add one field named datatype with a value that identifies the rule. That way, you can determine which Datatype each event belongs to by looking at that field.

Event Breaker Types

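Several types of Event Breakers can be applied to data:

- Regex

- JSON New Line Delimited

- JSON Array

- File Header

- Timestamp

- CSV

- AWS CloudTrail

- AWS VPC Flow Log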

For details about who can access and modify these resources, see Search Member Permissions.

Regex Event Breaker

The Regex breaker uses regular expressions to find breaking points in data.

Breaking occurs at the beginning of the match, and the matched content is consumed (discarded). If you need to keep that content, use a positive lookahead regex, for example: (?=pattern)

Do not use capturing groups anywhere in the Event Breaker pattern: they further break the data, which is usually undesirable. Breaking also occurs when Max Event Bytes is reached.

The highest Max Event Bytes value that you can set is about 128 MB (134217728 bytes). Events exceeding the maximum are split into separate events, but left unbroken. Cribl Search sets these events’ __isBroken internal field to false.
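Python's re.split has the same breaking semantics described above, which makes it a convenient way to reason about a breaker regex: the match is discarded, a lookahead keeps content, and a capturing group leaks extra pieces into the output. This is a minimal sketch, not Cribl Search's breaker; flagging only oversized pieces with __isBroken set to false is an assumption based on the paragraph above.

```python
import re

MAX_EVENT_BYTES = 134217728  # about 128 MB, the highest allowed setting

def break_events(data: bytes, breaker: bytes, max_event_bytes: int = MAX_EVENT_BYTES):
    """Split raw bytes wherever breaker matches; the matched bytes are dropped."""
    events = []
    for chunk in re.split(breaker, data):
        if not chunk:  # skip empty pieces (and None from unmatched groups)
            continue
        if len(chunk) <= max_event_bytes:
            events.append({"_raw": chunk})
            continue
        # Oversized: cut into max-size pieces and mark them as not cleanly broken.
        for i in range(0, len(chunk), max_event_bytes):
            events.append({"_raw": chunk[i:i + max_event_bytes], "__isBroken": False})
    return events
```

With [\n\r]+(?=\d{4}-), a line that does not start with a date stays attached to the previous event. With a capturing pattern such as (\n), the newline itself comes back as an extra "event", which illustrates why capturing groups should be avoided.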

Example

With the following configurations, let’s see how this sample data is datatyped:

- Event Breaker: [\n\r]+

- Parser: AWS VPC Flow Logs from the Library

- Add Field: "datatype": "aws_vpcflow"

--input--

2 123456789012 eni-0b2fc5457066bc156 10.0.0.164 54.239.152.25 41050 443 6 8 1297 1679339697 1679340000 ACCEPT OK

2 123456789013 eni-0b2fc5457066bc157 10.0.0.164 54.239.152.25 41050 443 6 8 1297 1679339698 1679340000 REJECT OK

--- output event 1 ---

{

"_raw": "2 123456789012 eni-0b2fc5457066bc156 10.0.0.164 54.239.152.25 41050 443 6 8 1297 1679339697 1679340000 ACCEPT OK",

"_time": 1679339697,

"datatype": "aws_vpcflow",

"version": "2",

"account_id": "123456789012",

"interface_id": "eni-0b2fc5457066bc156",

"srcaddr": "10.0.0.164",

"dstaddr": "54.239.152.25",

"srcport": "41050",

"dstport": "443",

"protocol": "6",

"packets": "8",

"bytes": "1297",

"start": "1679339697",

"end": "1679340000",

"action": "ACCEPT",

"log_status": "OK",

"dataset": "s3_vpcflowlogs"

}

--- output event 2 ---

{

"_raw": "2 123456789013 eni-0b2fc5457066bc157 10.0.0.164 54.239.152.25 41050 443 6 8 1297 1679339698 1679340000 REJECT OK",

"_time": 1679339698,

"datatype": "aws_vpcflow",

"version": "2",

"account_id": "123456789013",

"interface_id": "eni-0b2fc5457066bc157",

"srcaddr": "10.0.0.164",

"dstaddr": "54.239.152.25",

"srcport": "41050",

"dstport": "443",

"protocol": "6",

"packets": "8",

"bytes": "1297",

"start": "1679339698",

"end": "1679340000",

"action": "REJECT",

"log_status": "OK",

"dataset": "s3_vpcflowlogs"

}

JSON New Line Delimited Event Breaker

You can use the JSON New Line Delimited breaker to break and extract fields in newline-delimited JSON data.
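Conceptually, this breaker treats each non-empty line as one JSON object, keeps the line as _raw, and lifts its top-level keys into fields. The Python sketch below assumes the timestamp lives in a field named time (as in the sample that follows); that field name and the ISO 8601 parsing are assumptions, not the breaker's actual autotimestamp logic.

```python
import json
from datetime import datetime

def break_ndjson(text: str, time_field: str = "time") -> list:
    events = []
    for line in text.splitlines():
        if not line.strip():
            continue
        event = {"_raw": line}
        event.update(json.loads(line))  # extract top-level fields
        ts = event.get(time_field)
        if isinstance(ts, str):
            # fromisoformat() accepts a trailing "Z" only from Python 3.11 on.
            event["_time"] = datetime.fromisoformat(ts.replace("Z", "+00:00")).timestamp()
        events.append(event)
    return events
```

Run against the two sample lines below, this produces events whose _time values are 1590429654.201 and 1590429654.246.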

Example

Using default values, let’s see how this sample data breaks up:

--- input ---

{"time":"2020-05-25T18:00:54.201Z","cid":"w1","channel":"clustercomm","level":"info","message":"metric sender","total":720,"dropped":0}

{"time":"2020-05-25T18:00:54.246Z","cid":"w0","channel":"clustercomm","level":"info","message":"metric sender","total":720,"dropped":0}

--- output event 1 ---

{

"_raw": "{\"time\":\"2020-05-25T18:00:54.201Z\",\"cid\":\"w1\",\"channel\":\"clustercomm\",\"level\":\"info\",\"message\":\"metric sender\",\"total\":720,\"dropped\":0}",

"time": "2020-05-25T18:00:54.201Z",

"cid": "w1",

"channel": "clustercomm",

"level": "info",

"message": "metric sender",

"total": 720,

"dropped": 0,

"_time": 1590429654.201

}

--- output event 2 ---

{

"_raw": "{\"time\":\"2020-05-25T18:00:54.246Z\",\"cid\":\"w0\",\"channel\":\"clustercomm\",\"level\":\"info\",\"message\":\"metric sender\",\"total\":720,\"dropped\":0}",

"time": "2020-05-25T18:00:54.246Z",

"cid": "w0",

"channel": "clustercomm",

"level": "info",

"message": "metric sender",

"total": 720,

"dropped": 0,

"_time": 1590429654.246

}

JSON Array Event Breaker

You can use the JSON Array breaker to extract events from an array in a JSON document (for example, an Amazon CloudTrail file).

- Array Field: Optional path to the array in a JSON event with records to extract. For example, Records.

- Timestamp Field: Optional path to the timestamp field in extracted events. For example, eventTime or level1.level2.eventTime.

- JSON Extract Fields: Enable this slider to auto-extract fields from JSON events. If disabled, only _raw and time will be defined on extracted events.

- Timestamp Format: If JSON Extract Fields is set to No, you must set this to Autotimestamp or Current Time. If JSON Extract Fields is set to Yes, you can select any option here.
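The Array Field and Timestamp Field settings are dotted paths into the JSON document. This Python sketch shows one way to follow them; it assumes ISO 8601 timestamps and re-serializes each record, so _raw spacing can differ from the source file (unlike the output shown below). The helper names are hypothetical.

```python
import json
from datetime import datetime

def get_path(obj, path: str):
    """Follow a dotted path such as 'level1.level2.eventTime'."""
    for key in path.split("."):
        obj = obj[key]
    return obj

def break_json_array(text: str, array_field: str = "Records",
                     timestamp_field: str = "eventTime") -> list:
    doc = json.loads(text)
    records = get_path(doc, array_field) if array_field else doc
    events = []
    for record in records:
        # Re-serializing means _raw spacing can differ from the source file.
        event = {"_raw": json.dumps(record, separators=(",", ":"))}
        if timestamp_field:
            ts = get_path(record, timestamp_field)
            # fromisoformat() accepts a trailing "Z" only from Python 3.11 on.
            event["_time"] = datetime.fromisoformat(ts.replace("Z", "+00:00")).timestamp()
        events.append(event)
    return events
```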

Example

Using the values above, let’s see how this sample data breaks up:

--- input ---

{"Records":[{"eventVersion":"1.05","eventTime":"2020-04-08T01:35:55Z","eventSource":"ec2.amazonaws.com","eventName":"DescribeVolumes", "more_fields":"..."},

{"eventVersion":"1.05","eventTime":"2020-04-08T01:35:56Z","eventSource":"ec2.amazonaws.com","eventName":"DescribeInstanceAttribute", "more_fields":"..."}]}

--- output event 1 ---

{

"_raw": "{\"eventVersion\":\"1.05\",\"eventTime\":\"2020-04-08T01:35:55Z\",\"eventSource\":\"ec2.amazonaws.com\",\"eventName\":\"DescribeVolumes\", \"more_fields\":\"...\"}",

"_time": 1586309755,

"cribl_breaker": "j-array"

}

--- output event 2 ---

{

"_raw": "{\"eventVersion\":\"1.05\",\"eventTime\":\"2020-04-08T01:35:56Z\",\"eventSource\":\"ec2.amazonaws.com\",\"eventName\":\"DescribeInstanceAttribute\", \"more_fields\":\"...\"}",

"_time": 1586309756,

"cribl_breaker": "j-array"

}

File Header Event Breaker

You can use the File Header breaker to break files with headers, such as IIS or Bro logs. This type of breaker relies on a header section that lists field names. The header section is typically at the top of the file and can span one or more lines.

After the file has been broken into events, fields will also be extracted, as follows:

- Header Line: Regex matching a file header line. For example, ^#.

- Field Delimiter: Field delimiter regex. For example, \s+.

- Field Regex: Regex with one capturing group, capturing all the fields to be broken by the field delimiter. For example, ^#[Ff]ields[:]?\s+(.*)

- Null Values: Representation of a null value. Null fields are not added to events.

- Clean Fields: Whether to clean up field names by replacing characters outside [a-zA-Z0-9] with an underscore (_).
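The interplay of these five settings can be sketched in Python. This is a hypothetical model using the example values above as defaults, with - as the null value; header lines that match Header Line but not Field Regex (such as Zeek's #types line) are skipped, and timestamping is not modeled.

```python
import re

def break_with_header(text: str, header_line: str = r"^#", field_delim: str = r"\s+",
                      field_regex: str = r"^#[Ff]ields[:]?\s+(.*)",
                      null_value: str = "-", clean_fields: bool = True) -> list:
    names, events = [], []
    for line in text.splitlines():
        if not line:
            continue
        if re.match(header_line, line):
            m = re.match(field_regex, line)
            if m:  # only the header line that lists field names defines them
                names = re.split(field_delim, m.group(1).strip())
                if clean_fields:
                    names = [re.sub(r"[^a-zA-Z0-9]", "_", n) for n in names]
            continue
        values = re.split(field_delim, line.strip())
        event = {"_raw": line}
        for name, value in zip(names, values):
            if value != null_value:  # null fields are not added to events
                event[name] = value
        events.append(event)
    return events
```

Applied to the sample file below, id.orig_h becomes id_orig_h, and uid is omitted because its value is the null marker.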

Example

Using the values above, let’s see how this sample file breaks up:

--- input ---

#fields ts uid id.orig_h id.orig_p id.resp_h id.resp_p proto

#types time string addr port addr port enum

1331904608.080000 - 192.168.204.59 137 192.168.204.255 137 udp

1331904609.190000 - 192.168.202.83 48516 192.168.207.4 53 udp

--- output event 1 ---

{

"_raw": "1331904608.080000 - 192.168.204.59 137 192.168.204.255 137 udp",

"ts": "1331904608.080000",

"id_orig_h": "192.168.204.59",

"id_orig_p": "137",

"id_resp_h": "192.168.204.255",

"id_resp_p": "137",

"proto": "udp",

"_time": 1331904608.08

}

--- output event 2 ---

{

"_raw": "1331904609.190000 - 192.168.202.83 48516 192.168.207.4 53 udp",

"ts": "1331904609.190000",

"id_orig_h": "192.168.202.83",

"id_orig_p": "48516",

"id_resp_h": "192.168.207.4",

"id_resp_p": "53",

"proto": "udp",

"_time": 1331904609.19

}

Timestamp Event Breaker

You can use the Timestamp breaker to break events at the beginning of any line in which Cribl Search finds a timestamp. This type enables breaking on lines whose timestamp pattern is not known ahead of time.
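The core idea, "a line that contains a timestamp starts a new event", fits in a few lines of Python. The detector here is a hypothetical, deliberately narrow regex for ISO 8601-style date-times; the actual breaker recognizes many more formats. Lines without a timestamp are appended to the previous event.

```python
import re

# Hypothetical detector: ISO 8601-style date-times only, e.g. 2021-02-02T10:38:46.365Z.
TIMESTAMP_RE = re.compile(r"\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}")

def break_on_timestamps(text: str) -> list:
    events = []
    for line in text.splitlines():
        if TIMESTAMP_RE.search(line) or not events:
            events.append(line)          # a timestamped line starts a new event
        else:
            events[-1] += "\n" + line    # otherwise, continue the previous event
    return events
```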

Example

Using default values, let’s see how this sample data breaks up:

--- input ---

{"level":"debug","ts":"2021-02-02T10:38:46.365Z","caller":"sdk/sync.go:42","msg":"Handle ENIConfig Add/Update: us-west-2a, [sg-426fdac8e5c22542], subnet-42658cf14a98b42"}

{"level":"debug","ts":"2021-02-02T10:38:56.365Z","caller":"sdk/sync.go:42","msg":"Handle ENIConfig Add/Update: us-west-2a, [sg-426fdac8e5c22542], subnet-42658cf14a98b42"}

--- output event 1 ---

{

"_raw": "{\"level\":\"debug\",\"ts\":\"2021-02-02T10:38:46.365Z\",\"caller\":\"sdk/sync.go:42\",\"msg\":\"Handle ENIConfig Add/Update: us-west-2a, [sg-426fdac8e5c22542], subnet-42658cf14a98b42\"}",

"_time": 1612262326.365

}

--- output event 2 ---

{

"_raw": "{\"level\":\"debug\",\"ts\":\"2021-02-02T10:38:56.365Z\",\"caller\":\"sdk/sync.go:42\",\"msg\":\"Handle ENIConfig Add/Update: us-west-2a, [sg-426fdac8e5c22542], subnet-42658cf14a98b42\"}",

"_time": 1612262336.365

}

CSV Event Breaker

The CSV breaker extracts fields in CSV data that include a header line. Selecting this type exposes these extra fields:

- Delimiter: Delimiter character to use to split values. Defaults to a comma (,).

- Quote Char: Character used to quote literal values. Defaults to a double quote (").

- Escape Char: Character used to escape the quote character in field values. Defaults to a double quote (").

Example

Using default values, let’s see how this sample data breaks up:

--- input ---

time,host,source,model,serial,bytes_in,bytes_out,cpu

1611768713,"myHost1","anet","cisco","ASN4204269",11430,43322,0.78

1611768714,"myHost2","anet","cisco","ASN420423",345062,143433,0.28

--- output event 1 ---

{

"_raw": "\"1611768713\",\"myHost1\",\"anet\",\"cisco\",\"ASN4204269\",\"11430\",\"43322\",\"0.78\"",

"time": "1611768713",

"host": "myHost1",

"source": "anet",

"model": "cisco",

"serial": "ASN4204269",

"bytes_in": "11430",

"bytes_out": "43322",

"cpu": "0.78",

"_time": 1611768713

}

--- output event 2 ---

{

"_raw": "\"1611768714\",\"myHost2\",\"anet\",\"cisco\",\"ASN420423\",\"345062\",\"143433\",\"0.28\"",

"time": "1611768714",

"host": "myHost2",

"source": "anet",

"model": "cisco",

"serial": "ASN420423",

"bytes_in": "345062",

"bytes_out": "143433",

"cpu": "0.28",

"_time": 1611768714

}

With Type: CSV selected, an Event Breaker will properly add quotes around all values, regardless of their initial state.
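The CSV breaker's behavior, including re-quoting every value in _raw as the note above describes, can be approximated with Python's csv module. Reading _time from a numeric time column is an assumption that fits the sample above, not a general rule. With the default Escape Char ("), an embedded quote is written as two quotes, which the csv module handles through its default doublequote=True.

```python
import csv
import io

def break_csv(text: str, delimiter: str = ",", quote_char: str = '"') -> list:
    """Turn CSV text with a header line into events with extracted fields."""
    reader = csv.DictReader(io.StringIO(text), delimiter=delimiter, quotechar=quote_char)
    events = []
    for row in reader:
        out = io.StringIO()
        # Rebuild _raw with every value quoted, regardless of the input quoting.
        csv.writer(out, delimiter=delimiter, quotechar=quote_char,
                   quoting=csv.QUOTE_ALL, lineterminator="").writerow(row.values())
        event = {"_raw": out.getvalue(), **row}
        if "time" in row:  # assumption: a numeric epoch "time" column
            event["_time"] = float(row["time"])
        events.append(event)
    return events
```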

AWS CloudTrail Event Breaker

Use the AWS CloudTrail breaker, which is specialized for the CloudTrail format, to break and parse AWS CloudTrail events quickly.

See the example of input and output events below.

--- input ---

{"Records":[{"eventVersion":"1.0","userIdentity":{"type":"IAMUser","principalId":"EX_PRINCIPAL_ID","arn":"arn:aws:iam::123456789012:user/Alice","accessKeyId":"EXAMPLE_KEY_ID","accountId":"123456789012","userName":"Alice"},"eventTime":"2014-03-06T21:22:54Z","eventSource":"ec2.amazonaws.com","eventName":"StartInstances","awsRegion":"us-east-2","sourceIPAddress":"205.251.233.176","userAgent":"ec2-api-tools 1.6.12.2","requestParameters":{"instancesSet":{"items":[{"instanceId":"i-ebeaf9e2"}]}},"responseElements":{"instancesSet":{"items":[{"instanceId":"i-ebeaf9e2","currentState":{"code":0,"name":"pending"},"previousState":{"code":80,"name":"stopped"}}]}}},{"eventVersion":"1.0","userIdentity":{"type":"IAMUser","principalId":"EX_PRINCIPAL_ID","arn":"arn:aws:iam::123456789012:user/Rabbit","accessKeyId":"EXAMPLE_KEY_ID","accountId":"123456789012","userName":"Rabbit"},"eventTime":"2014-03-06T21:22:54Z","eventSource":"ec2.amazonaws.com","eventName":"StartInstances","awsRegion":"us-east-2","sourceIPAddress":"205.251.233.176","userAgent":"ec2-api-tools 1.6.12.2","requestParameters":{"instancesSet":{"items":[{"instanceId":"i-ebeaf9e2"}]}},"responseElements":{"instancesSet":{"items":[{"instanceId":"i-ebeaf9e2","currentState":{"code":0,"name":"pending"},"previousState":{"code":80,"name":"stopped"}}]}}},{"eventVersion":"1.0","userIdentity":{"type":"IAMUser","principalId":"EX_PRINCIPAL_ID","arn":"arn:aws:iam::123456789012:user/Hatter","accessKeyId":"EXAMPLE_KEY_ID","accountId":"123456789012","userName":"Hatter"},"eventTime":"2014-03-06T21:22:54Z","eventSource":"ec2.amazonaws.com","eventName":"StartInstances","awsRegion":"us-east-2","sourceIPAddress":"205.251.233.176","userAgent":"ec2-api-tools 1.6.12.2","requestParameters":{"instancesSet":{"items":[{"instanceId":"i-ebeaf9e2"}]}},"responseElements":{"instancesSet":{"items":[{"instanceId":"i-ebeaf9e2","currentState":{"code":0,"name":"pending"},"previousState":{"code":80,"name":"stopped"}}]}}}]}

--- output event 1 ---

{

"_raw": "{\"eventVersion\":\"1.0\",\"userIdentity\":{\"type\":\"IAMUser\",\"principalId\":\"EX_PRINCIPAL_ID\",\"arn\":\"arn:aws:iam::123456789012:user/Alice\",\"accessKeyId\":\"EXAMPLE_KEY_ID\",\"accountId\":\"123456789012\",\"userName\":\"Alice\"},\"eventTime\":\"2014-03-06T21:22:54Z\",\"eventSource\":\"ec2.amazonaws.com\",\"eventName\":\"StartInstances\",\"awsRegion\":\"us-east-2\",\"sourceIPAddress\":\"205.251.233.176\",\"userAgent\":\"ec2-api-tools 1.6.12.2\",\"requestParameters\":{\"instancesSet\":{\"items\":[{\"instanceId\":\"i-ebeaf9e2\"}]}},\"responseElements\":{\"instancesSet\":{\"items\":[{\"instanceId\":\"i-ebeaf9e2\",\"currentState\":{\"code\":0,\"name\":\"pending\"},\"previousState\":{\"code\":80,\"name\":\"stopped\"}}]}}}",

"_time": 1711112256.322

}

--- output event 2 ---

{

"_raw": "{\"eventVersion\":\"1.0\",\"userIdentity\":{\"type\":\"IAMUser\",\"principalId\":\"EX_PRINCIPAL_ID\",\"arn\":\"arn:aws:iam::123456789012:user/Rabbit\",\"accessKeyId\":\"EXAMPLE_KEY_ID\",\"accountId\":\"123456789012\",\"userName\":\"Rabbit\"},\"eventTime\":\"2014-03-06T21:22:54Z\",\"eventSource\":\"ec2.amazonaws.com\",\"eventName\":\"StartInstances\",\"awsRegion\":\"us-east-2\",\"sourceIPAddress\":\"205.251.233.176\",\"userAgent\":\"ec2-api-tools 1.6.12.2\",\"requestParameters\":{\"instancesSet\":{\"items\":[{\"instanceId\":\"i-ebeaf9e2\"}]}},\"responseElements\":{\"instancesSet\":{\"items\":[{\"instanceId\":\"i-ebeaf9e2\",\"currentState\":{\"code\":0,\"name\":\"pending\"},\"previousState\":{\"code\":80,\"name\":\"stopped\"}}]}}}",

"_time": 1711112355.478

}

--- output event 3 ---

{

"_raw": "{\"eventVersion\":\"1.0\",\"userIdentity\":{\"type\":\"IAMUser\",\"principalId\":\"EX_PRINCIPAL_ID\",\"arn\":\"arn:aws:iam::123456789012:user/Hatter\",\"accessKeyId\":\"EXAMPLE_KEY_ID\",\"accountId\":\"123456789012\",\"userName\":\"Hatter\"},\"eventTime\":\"2014-03-06T21:22:54Z\",\"eventSource\":\"ec2.amazonaws.com\",\"eventName\":\"StartInstances\",\"awsRegion\":\"us-east-2\",\"sourceIPAddress\":\"205.251.233.176\",\"userAgent\":\"ec2-api-tools 1.6.12.2\",\"requestParameters\":{\"instancesSet\":{\"items\":[{\"instanceId\":\"i-ebeaf9e2\"}]}},\"responseElements\":{\"instancesSet\":{\"items\":[{\"instanceId\":\"i-ebeaf9e2\",\"currentState\":{\"code\":0,\"name\":\"pending\"},\"previousState\":{\"code\":80,\"name\":\"stopped\"}}]}}}",

"_time": 1711112355.478

}

AWS VPC Flow Log Event Breaker

Use the AWS VPC Flow Log breaker, which is specialized for the VPC Flow Log format, to break and parse AWS VPC Flow Log events quickly.

This breaker works properly with files formatted as follows:

- Each file starts with a header. That header is in the same format as other lines but describes the column names.

- Every line has the same number of columns.

- Each column is separated by a single space character.

- Each line ends with the newline character (\n).

- A single dash (-) indicates a null value for the column where it appears.
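The format requirements above translate directly into a small Python check-and-split routine. This is a sketch, not the built-in breaker: rejecting rows with the wrong column count and omitting null (-) columns from the event are assumptions about how to handle those cases, and timestamping is not modeled.

```python
def break_vpc_flow(text: str) -> list:
    """Break VPC Flow Log text whose first line is a space-separated header."""
    lines = text.rstrip("\n").split("\n")  # each line ends with \n
    header = lines[0].split(" ")            # the header names the columns
    events = []
    for line in lines[1:]:
        values = line.split(" ")            # one space between columns
        if len(values) != len(header):
            raise ValueError(f"expected {len(header)} columns, got {len(values)}")
        event = {"_raw": line}
        # Assumption: null ("-") columns are omitted from the event.
        event.update({k: v for k, v in zip(header, values) if v != "-"})
        events.append(event)
    return events
```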

See the example of input and output events below.

--- input ---

version account_id interface_id srcaddr dstaddr srcport dstport protocol packets bytes start end action log_status

2 123456789014 eni-07e5d76940fbaa5c6 231.234.233.57 83.70.34.149 19856 15726 17 3048 380 1262332800 1262332900 ACCEPT NODATA

2 123456789010 eni-0858304751c757b2e 31.200.171.164 22.109.125.129 58551 456 17 10824 352 1262332801 1262333301 ACCEPT OK

2 123456789013 eni-07e5d76940fbaa5c6 78.128.152.196 67.42.235.136 25164 29139 17 5380 160 1262332802 1262332902 REJECT OK

--- output event 1 ---

{

"_raw": "2 123456789014 eni-07e5d76940fbaa5c6 231.234.233.57 83.70.34.149 19856 15726 17 3048 380 1262332800 1262332900 ACCEPT NODATA",

"version": "2",

"account_id": "123456789014",

"interface_id": "eni-07e5d76940fbaa5c6",

"srcaddr": "231.234.233.57",

"dstaddr": "83.70.34.149",

"srcport": "19856",

"dstport": "15726",

"protocol": "17",

"packets": "3048",

"bytes": "380",

"start": "1262332800",

"end": "1262332900",

"action": "ACCEPT",

"log_status": "NODATA",

"_time": 1611768713

}

--- output event 2 ---

{

"_raw": "2 123456789010 eni-0858304751c757b2e 31.200.171.164 22.109.125.129 58551 456 17 10824 352 1262332801 1262333301 ACCEPT OK",

"version": "2",

"account_id": "123456789010",

"interface_id": "eni-0858304751c757b2e",

"srcaddr": "31.200.171.164",

"dstaddr": "22.109.125.129",

"srcport": "58551",

"dstport": "456",

"protocol": "17",

"packets": "10824",

"bytes": "352",

"start": "1262332801",

"end": "1262333301",

"action": "ACCEPT",

"log_status": "OK",

"_time": 1611768714

}

--- output event 3 ---

{

"_raw": "2 123456789013 eni-07e5d76940fbaa5c6 78.128.152.196 67.42.235.136 25164 29139 17 5380 160 1262332802 1262332902 REJECT OK",

"version": "2",

"account_id": "123456789013",

"interface_id": "eni-07e5d76940fbaa5c6",

"srcaddr": "78.128.152.196",

"dstaddr": "67.42.235.136",

"srcport": "25164",

"dstport": "29139",

"protocol": "17",

"packets": "5380",

"bytes": "160",

"start": "1262332802",

"end": "1262332902",

"action": "REJECT",

"log_status": "OK",

"_time": 1611768715

}

With Type: AWS VPC Flow Log selected, an Event Breaker will properly add quotes around all values, regardless of their initial state.

Cribl rulesets versus Custom rulesets

Datatype rulesets shipped by Cribl are listed under the Cribl tag, while user-built ones are listed under Custom. Over time, Cribl will ship more rulesets, so this distinction allows both sets to grow independently. In the case of an ID or name conflict, the Custom ruleset takes priority in listings and search.

Exporting and Importing Datatype rulesets

You can export and import Custom (or Cribl) rulesets as JSON files. This facilitates sharing across Cribl.Cloud organizations.

To export a Datatype ruleset:

- Open an existing ruleset, or create a new one.

- In the resulting modal, select Manage as JSON to open the JSON editor.

- You can now modify the ruleset directly in JSON, if you choose.

- Select Export, select a destination path, and name the file.

To import any Datatype ruleset that has been exported as a valid JSON file:

- Create a new ruleset.

- In the resulting modal, select Manage as JSON to open the JSON editor.

- Select Import, and choose the file you want.

- Select OK to get back to the New ruleset modal.

- Select Save.

Every Datatype ruleset must have a unique value in its top-level id key. Importing a JSON file with a duplicate id value will fail at the final Save step, with a message that the ruleset already exists. You can remedy this by giving the file a unique id value.
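If you manage exported rulesets as files, you can catch this collision before importing. The helpers below are hypothetical (they are not part of Cribl Search); they rely only on the top-level id key described above and make no assumptions about the rest of the ruleset schema.

```python
import json

def validate_ruleset_import(json_text: str, existing_ids: set) -> str:
    """Pre-flight check: the top-level "id" must be present and unique."""
    ruleset = json.loads(json_text)
    rid = ruleset.get("id")
    if not isinstance(rid, str) or not rid:
        raise ValueError("ruleset JSON has no top-level id")
    if rid in existing_ids:
        raise ValueError(f"ruleset {rid!r} already exists; give it a unique id")
    return rid

def with_unique_id(json_text: str, new_id: str) -> str:
    """Return a copy of the exported ruleset with its top-level id replaced."""
    ruleset = json.loads(json_text)
    ruleset["id"] = new_id
    return json.dumps(ruleset, indent=2)
```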

Datatype Rulesets Shipped with Cribl Search

You can use these Datatype rulesets out of the box:

- Apache Datatypes

- AWS Datatypes

- Azure Datatypes

- Cisco Datatypes

- Cribl Search _raw Data

- Cribl Search

- CSV Datatypes

- Microsoft Graph API Datatypes

- Microsoft O365 Datatypes

- Microsoft Windows Datatypes

- OCSF Datatypes

- Palo Alto Datatypes

- Syslog Datatypes

- Zeek Datatypes

Apache Datatypes

Parses Apache web server logs.

This Datatype ruleset consists of the following rules, evaluated top-down:

| # | Rule name | Value of the datatype field added to the event |

|---|---|---|

| 1 | Apache Common | apache_access |

| 2 | Apache Combined | apache_access_combined |

See some examples:

--- input ---

127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326

--- output ---

{

"_raw": "127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] \"GET /apache_pb.gif HTTP/1.0\" 200 2326",

"_time": 971211336,

"datatype": "apache_access",

"clientip": "127.0.0.1",

"ident": "-",

"user": "frank",

"timestamp": "10/Oct/2000:13:55:36 -0700",

"request": "GET /apache_pb.gif HTTP/1.0",

"status": "200",

"bytes": "2326"

}

--- input ---

127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326 "http://www.example.com/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"

--- output ---

{

"_raw": "127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] \"GET /apache_pb.gif HTTP/1.0\" 200 2326 \"http://www.example.com/start.html\" \"Mozilla/4.08 [en] (Win98; I ;Nav)\"",

"_time": 971211336,

"datatype": "apache_access_combined",

"clientip": "127.0.0.1",

"ident": "-",

"user": "frank",

"timestamp": "10/Oct/2000:13:55:36 -0700",

"request": "GET /apache_pb.gif HTTP/1.0",

"status": "200",

"bytes": "2326",

"referer": "http://www.example.com/start.html",

"useragent": "Mozilla/4.08 [en] (Win98; I ;Nav)"

}

AWS Datatypes

Parses common AWS log formats, such as VPC Flow, CloudFront, and WAF logs.

This Datatype ruleset consists of the following rules, evaluated top-down:

| # | Rule name | Value of the datatype field added to the event |

|---|---|---|

| 1 | AWS CloudTrail | aws_cloudtrail |

| 2 | AWS VPC Flow | aws_vpcflow |

| 3 | AWS ALB | aws_alb_accesslogs |

| 4 | AWS ELB | aws_elb_accesslogs |

| 5 | AWS Cloudfront Web | aws_cloudfront_accesslogs |

| 6 | AWS WAF | aws_waf |

See some examples:

---input---

{

"eventTime": "2014-03-06T21:22:54Z",

"eventSource": "ec2.amazonaws.com",

"eventName": "StartInstances",

"userIdentity": {

"accountId": "123456789012",

"userName": "Hatter"

}

}

---output---

{

"_raw": "{\"eventTime\":\"2014-03-06T21:22:54Z\",\"eventSource\":\"ec2.amazonaws.com\",\"eventName\":\"StartInstances\",\"userIdentity\":{\"accountId\":\"123456789012\",\"userName\":\"Hatter\"}}",

"_time": 1394140974,

"datatype": "aws_cloudtrail",

"eventTime": "2014-03-06T21:22:54Z",

"eventSource": "ec2.amazonaws.com",

"eventName": "StartInstances",

"userIdentity": {

"accountId": "123456789012",

"userName": "Hatter"

}

}

---input---

version account_id interface_id srcaddr dstaddr srcport dstport protocol packets bytes start end action log_status

2 123456789010 eni-0858304751c757b2e 31.200.171.164 22.109.125.129 58551 456 17 10824 352 1262332801 1262333301 ACCEPT OK

---output---

{

"_raw": "2 123456789010 eni-0858304751c757b2e 31.200.171.164 22.109.125.129 58551 456 17 10824 352 1262332801 1262333301 ACCEPT OK",

"_time": 1262332801,

"datatype": "aws_vpcflow",

"version": "2",

"account_id": "123456789010",

"interface_id": "eni-0858304751c757b2e",

"srcaddr": "31.200.171.164",

"dstaddr": "22.109.125.129",

"srcport": "58551",

"dstport": "456",

"protocol": "17",

"packets": "10824",

"bytes": "352",

"start": "1262332801",

"end": "1262333301",

"action": "ACCEPT",

"log_status": "OK"

}

---input---

http 2023-05-15T12:00:00.000000Z app/loadbalancer 192.0.2.1:12345 198.51.100.1:443 0.001 0.002 0.000 200 200 0 57 "GET https://example.com:443/path/to/resource?foo=bar HTTP/1.1" "Mozilla/5.0 (compatible; ExampleBot/1.0)" - - arn:aws:elasticloadbalancing:us-west-2:123456789012:targetgroup/example-target/abcdef1234567890 "Root=1-5f84c7a9-1d2c3e4f5a6b7c8d9e0f1234" "example.com" "Forward" "-"

---output---

{

"_raw": "http 2023-05-15T12:00:00.000000Z app/loadbalancer 192.0.2.1:12345 198.51.100.1:443 0.001 0.002 0.000 200 200 0 57 \"GET https://example.com:443/path/to/resource?foo=bar HTTP/1.1\" \"Mozilla/5.0 (compatible; ExampleBot/1.0)\" - - arn:aws:elasticloadbalancing:us-west-2:123456789012:targetgroup/example-target/abcdef1234567890 \"Root=1-5f84c7a9-1d2c3e4f5a6b7c8d9e0f1234\" \"example.com\" \"Forward\" \"-\"",

"_time": 1684152000,

"datatype": "aws_alb_accesslogs",

"type": "http",

"timestamp": "2023-05-15T12:00:00.000000Z",

"elb": "app/loadbalancer",

"client_port": "192.0.2.1:12345",

"target_port": "198.51.100.1:443",

"request_processing_time": "0.001",

"target_processing_time": "0.002",

"response_processing_time": "0.000",

"elb_status_code": "200",

"target_status_code": "200",

"received_bytes": "0",

"sent_bytes": "57",

"request": "GET https://example.com:443/path/to/resource?foo=bar HTTP/1.1",

"user_agent": "Mozilla/5.0 (compatible; ExampleBot/1.0)",

"ssl_cipher": "-",

"ssl_protocol": "-",

"target_group_arn": "arn:aws:elasticloadbalancing:us-west-2:123456789012:targetgroup/example-target/abcdef1234567890",

"trace_id": "Root=1-5f84c7a9-1d2c3e4f5a6b7c8d9e0f1234",

"domain_name": "example.com",

"chosen_cert_arn": "Forward",

"matched_rule_priority": "-"

}

---input---

2023-05-15T12:00:00.000000Z my-loadbalancer 192.168.131.39:2817 10.0.0.1:80 0.000073 0.001048 0.000057 200 200 0 29 "GET http://www.example.com:80/ HTTP/1.1" "Curl/7.24.0 (x86_64-redhat-linux-gnu) libcurl/7.24.0 NSS/3.13.5.0 zlib/1.2.5 libidn/1.18 libssh2/1.2.2" - -

---output---

{

"_raw": "2023-05-15T12:00:00.000000Z my-loadbalancer 192.168.131.39:2817 10.0.0.1:80 0.000073 0.001048 0.000057 200 200 0 29 \"GET http://www.example.com:80/ HTTP/1.1\" \"Curl/7.24.0 (x86_64-redhat-linux-gnu) libcurl/7.24.0 NSS/3.13.5.0 zlib/1.2.5 libidn/1.18 libssh2/1.2.2\" - -",

"_time": 1684152000,

"datatype": "aws_elb_accesslogs",

"timestamp": "2023-05-15T12:00:00.000000Z",

"elb": "my-loadbalancer",

"client_port": "192.168.131.39:2817",

"backend_port": "10.0.0.1:80",

"request_processing_time": "0.000073",

"backend_processing_time": "0.001048",

"response_processing_time": "0.000057",

"elb_status_code": "200",

"backend_status_code": "200",

"received_bytes": "0",

"sent_bytes": "29",

"request": "GET http://www.example.com:80/ HTTP/1.1",

"user_agent": "Curl/7.24.0 (x86_64-redhat-linux-gnu) libcurl/7.24.0 NSS/3.13.5.0 zlib/1.2.5 libidn/1.18 libssh2/1.2.2",

"ssl_cipher": "-",

"ssl_protocol": "-"

}

---input---

2019-12-04 21:02:31 LAX1 392 192.0.2.100 GET d111111abcdef8.cloudfront.net /index.html 200 - Mozilla/5.0%20(Windows%20NT%2010.0;%20Win64;%20x64)%20AppleWebKit/537.36%20(KHTML,%20like%20Gecko)%20Chrome/78.0.3904.108%20Safari/537.36 - - Hit SOX4xwn4XV6Q4rgb7XiVGwqems0SDmkq-khqfcC82eB0Oa9b7g== d111111abcdef8.cloudfront.net https 23 0.001 - TLSv1.2 ECDHE-RSA-AES128-GCM-SHA256 Hit HTTP/2.0 - -

---output---

{

"_raw": "2019-12-04\t21:02:31\tLAX1\t392\t192.0.2.100\tGET\td111111abcdef8.cloudfront.net\t/index.html\t200\t-\tMozilla/5.0%20(Windows%20NT%2010.0;%20Win64;%20x64)%20AppleWebKit/537.36%20(KHTML,%20like%20Gecko)%20Chrome/78.0.3904.108%20Safari/537.36\t-\t-\tHit\tSOX4xwn4XV6Q4rgb7XiVGwqems0SDmkq-khqfcC82eB0Oa9b7g==\td111111abcdef8.cloudfront.net\thttps\t23\t0.001\t-\tTLSv1.2\tECDHE-RSA-AES128-GCM-SHA256\tHit\tHTTP/2.0\t-\t-",

"_time": 1575493351,

"datatype": "aws_cloudfront_accesslogs",

"date": "2019-12-04",

"time": "21:02:31",

"x_edge_location": "LAX1",

"sc_bytes": "392",

"c_ip": "192.0.2.100",

"cs_method": "GET",

"cs_host": "d111111abcdef8.cloudfront.net",

"cs_uri_stem": "/index.html",

"sc_status": "200",

"cs_referer": "-",

"cs_user_agent": "Mozilla/5.0%20(Windows%20NT%2010.0;%20Win64;%20x64)%20AppleWebKit/537.36%20(KHTML,%20like%20Gecko)%20Chrome/78.0.3904.108%20Safari/537.36",

"cs_uri_query": "-",

"cs_cookie": "-",

"x_edge_result_type": "Hit",

"x_edge_request_id": "SOX4xwn4XV6Q4rgb7XiVGwqems0SDmkq-khqfcC82eB0Oa9b7g==",

"x_host_header": "d111111abcdef8.cloudfront.net",

"cs_protocol": "https",

"cs_bytes": "23",

"time_taken": "0.001",

"x_forwarded_for": "-",

"ssl_protocol": "TLSv1.2",

"ssl_cipher": "ECDHE-RSA-AES128-GCM-SHA256",

"x_edge_response_result_type": "Hit",

"cs_protocol_version": "HTTP/2.0",

"fle_status": "-",

"fle_encrypted_fields": "-"

}

---input---

{

"timestamp": 1575500000000,

"formatVersion": 1,

"webaclId": "arn:aws:wafv2:us-east-1:123456789012:regional/webacl/ExampleWebACL/12345678-1234-1234-1234-123456789012",

"terminatingRuleId": "Default_Action",

"terminatingRuleType": "REGULAR",

"action": "ALLOW",

"httpSourceName": "ALB",

"httpSourceId": "123456789012-app/my-load-balancer/50dc6c495c0c9188",

"httpRequest": {

"clientIp": "192.0.2.1",

"country": "US",

"uri": "/",

"httpMethod": "GET",

"requestId": "12345678-1234-1234-1234-123456789012"

}

}

---output---

{

"_raw": "{\"timestamp\":1575500000000,\"formatVersion\":1,\"webaclId\":\"arn:aws:wafv2:us-east-1:123456789012:regional/webacl/ExampleWebACL/12345678-1234-1234-1234-123456789012\",\"terminatingRuleId\":\"Default_Action\",\"terminatingRuleType\":\"REGULAR\",\"action\":\"ALLOW\",\"httpSourceName\":\"ALB\",\"httpSourceId\":\"123456789012-app/my-load-balancer/50dc6c495c0c9188\",\"httpRequest\":{\"clientIp\":\"192.0.2.1\",\"country\":\"US\",\"uri\":\"/\",\"httpMethod\":\"GET\",\"requestId\":\"12345678-1234-1234-1234-123456789012\"}}",

"_time": 1575500000,

"datatype": "aws_waf",

"timestamp": 1575500000000,

"formatVersion": 1,

"webaclId": "arn:aws:wafv2:us-east-1:123456789012:regional/webacl/ExampleWebACL/12345678-1234-1234-1234-123456789012",

"terminatingRuleId": "Default_Action",

"terminatingRuleType": "REGULAR",

"action": "ALLOW",

"httpSourceName": "ALB",

"httpSourceId": "123456789012-app/my-load-balancer/50dc6c495c0c9188",

"httpRequest": {

"clientIp": "192.0.2.1",

"country": "US",

"uri": "/",

"httpMethod": "GET",

"requestId": "12345678-1234-1234-1234-123456789012"

}

}

Azure Datatypes

Parses Azure-specific logs, such as Network Security Group flow logs.

This Datatype ruleset consists of a single rule:

| Rule name | Value of the datatype field added to the event |

|---|---|

| Network Security Group | azure_networksecuritygroup |
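The output in the example below contains fields that do not appear in the input, such as subscriptionId, resourceGroupName, and numFlows. The following Python sketch shows one way to derive those values from a single NSG record. It is a hypothetical illustration, not Cribl Search's implementation. The helper name derive_nsg_fields is made up. The sketch also assumes the resourceId layout shown in the example, and that numFlows counts flow tuples.

```python
def derive_nsg_fields(record: dict) -> dict:
    """Hypothetical helper: derive extra fields from one NSG flow-log record."""
    # resourceId layout (assumed, as in the example):
    # /SUBSCRIPTIONS/<id>/RESOURCEGROUPS/<rg>/PROVIDERS/<namespace>/<type>/<name>
    parts = record["resourceId"].strip("/").split("/")
    derived = {
        "subscriptionId": parts[1],
        "resourceGroupName": parts[3],
        "resourceProviderNamespace": parts[5],
        "resourceType": parts[6],
        "resourceName": parts[7],
    }
    props = record.get("properties", {})
    derived["nsgVersion"] = props.get("Version")
    # Assumption: numFlows counts flow tuples across all rules and MAC-level groups.
    derived["numFlows"] = sum(
        len(mac_group.get("flowTuples", []))
        for rule in props.get("flows", [])
        for mac_group in rule.get("flows", [])
    )
    return derived
```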

Here’s an example of how this Datatype processes an NSG flow log event:

{

"records": [

{

"time": "2023-05-15T12:00:00.0000000Z",

"systemId": "00000000-0000-0000-0000-000000000000",

"category": "NetworkSecurityGroupFlowEvent",

"resourceId": "/SUBSCRIPTIONS/12345678-1234-1234-1234-123456789012/RESOURCEGROUPS/MY-RESOURCE-GROUP/PROVIDERS/MICROSOFT.NETWORK/NETWORKSECURITYGROUPS/MY-NSG",

"operationName": "NetworkSecurityGroupFlowEvents",

"properties": {

"Version": 2,

"flows": [

{

"rule": "UserRule_Allow-HTTPS-Inbound",

"flows": [

{

"mac": "000D3A146A48",

"flowTuples": ["10.0.0.4,10.0.0.5,4096,443,T,O,A,1623840360,1623840420,2,200"]

}

]

}

]

}

}

]

}

{

"_raw": "{\"records\":[{\"time\":\"2023-05-15T12:00:00.0000000Z\",\"systemId\":\"00000000-0000-0000-0000-000000000000\",\"category\":\"NetworkSecurityGroupFlowEvent\",\"resourceId\":\"/SUBSCRIPTIONS/12345678-1234-1234-1234-123456789012/RESOURCEGROUPS/MY-RESOURCE-GROUP/PROVIDERS/MICROSOFT.NETWORK/NETWORKSECURITYGROUPS/MY-NSG\",\"operationName\":\"NetworkSecurityGroupFlowEvents\",\"properties\":{\"Version\":2,\"flows\":[{\"rule\":\"UserRule_Allow-HTTPS-Inbound\",\"flows\":[{\"mac\":\"000D3A146A48\",\"flowTuples\":[\"10.0.0.4,10.0.0.5,4096,443,T,O,A,1623840360,1623840420,2,200\"]}]}]}}]}",

"_time": 1684152000,

"datatype": "azure_networksecuritygroup",

"time": "2023-05-15T12:00:00.0000000Z",

"systemId": "00000000-0000-0000-0000-000000000000",

"category": "NetworkSecurityGroupFlowEvent",

"resourceId": "/SUBSCRIPTIONS/12345678-1234-1234-1234-123456789012/RESOURCEGROUPS/MY-RESOURCE-GROUP/PROVIDERS/MICROSOFT.NETWORK/NETWORKSECURITYGROUPS/MY-NSG",

"operationName": "NetworkSecurityGroupFlowEvents",

"properties": {

"Version": 2,

"flows": [

{

"rule": "UserRule_Allow-HTTPS-Inbound",

"flows": [

{

"mac": "000D3A146A48",

"flowTuples": ["10.0.0.4,10.0.0.5,4096,443,T,O,A,1623840360,1623840420,2,200"]

}

]

}

]

},

"subscriptionId": "12345678-1234-1234-1234-123456789012",

"resourceGroupName": "MY-RESOURCE-GROUP",

"resourceProviderNamespace": "MICROSOFT.NETWORK",

"resourceType": "NETWORKSECURITYGROUPS",

"resourceName": "MY-NSG",

"nsgVersion": 2,

"numFlows": 1

}

Cisco Datatypes

Parses Cisco device logs.

This Datatype ruleset consists of the following rules, evaluated top-down:

| # | Rule name | Value of the datatype field added to the event |

|---|---|---|

| 1 | Cisco ASA | cisco_asa |

| 2 | Cisco FWSM | cisco_fwsm |

| 3 | Cisco Estreamer | cisco_estreamer_data |
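Rules are evaluated top-down and the first match wins. The order in this table therefore matters whenever two filters could match the same event. The Python sketch below models that behavior. The filter expressions are hypothetical stand-ins keyed on the message prefixes in the examples; the actual rule filters in Cribl Search may differ.

```python
import re
from typing import Callable, List, Optional, Tuple

# Hypothetical filters, listed in the documented evaluation order (ASA, FWSM, Estreamer).
RULES: List[Tuple[str, Callable[[str], bool], str]] = [
    ("Cisco ASA", lambda raw: re.search(r"%ASA-\d-\d{6}", raw) is not None, "cisco_asa"),
    ("Cisco FWSM", lambda raw: re.search(r"%FWSM-\d-\d{6}", raw) is not None, "cisco_fwsm"),
    ("Cisco Estreamer", lambda raw: raw.startswith("rec_type="), "cisco_estreamer_data"),
]


def match_datatype(raw: str) -> Optional[str]:
    """Return the datatype of the first rule whose filter matches, evaluating top-down."""
    for _name, matches, datatype in RULES:
        if matches(raw):
            return datatype  # first match wins; later rules are never consulted
    return None  # no rule in this ruleset matched
```

In this sketch, a None result means only that no rule in this ruleset matched the event.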

See some examples:

--- input ---

%ASA-1-104001: Switching to ACTIVE - Primary interface is up

--- output ---

{

"_raw": "%ASA-1-104001: Switching to ACTIVE - Primary interface is up",

"_time": 1685613600,

"datatype": "cisco_asa",

"device": "ASA-1",

"event": "104001",

"status": "Switching to ACTIVE",

"interface": "Primary"

}

--- input ---

%FWSM-1-104001: Switching to ACTIVE - Primary interface is up

--- output ---

{

"_raw": "%FWSM-1-104001: Switching to ACTIVE - Primary interface is up",

"_time": 1685613600,

"datatype": "cisco_fwsm",

"device": "FWSM-1",

"event": "104001",

"status": "Switching to ACTIVE",

"interface": "Primary"

}

--- input ---

rec_type=network_activity event_sec=1717334400

--- output ---

{

"_raw": "rec_type=network_activity event_sec=1717334400",

"_time": 1717334400,

"datatype": "cisco_estreamer_data",

"rec_type": "network_activity",

"event_sec": "1717334400"

}

Cribl Search

Parses newline-delimited JSON (NDJSON) data.

This Datatype ruleset consists of a single rule:

| Rule name | Value of the datatype field added to the event |

|---|---|

| ndjson | cribl_json |
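NDJSON places one complete JSON object on each line, so event breaking reduces to splitting on newlines. The Python sketch below parses each line and derives _time from a timestamp field. The field name timestamp and the truncation to whole seconds are assumptions based on the example below, not documented behavior.

```python
import json
from datetime import datetime


def parse_ndjson(text: str) -> list:
    """Split NDJSON text into events: one JSON object per non-empty line."""
    events = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        fields = json.loads(line)
        event = {"_raw": line, "datatype": "cribl_json", **fields}
        ts = fields.get("timestamp")  # assumed timestamp field name
        if isinstance(ts, str):
            # fromisoformat() before Python 3.11 rejects a trailing "Z", so swap in +00:00.
            parsed = datetime.fromisoformat(ts.replace("Z", "+00:00"))
            event["_time"] = int(parsed.timestamp())  # whole seconds, as in the example
        events.append(event)
    return events
```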

Here’s an example:

{"timestamp":"2024-06-11T10:23:45.432Z","level":"warn","message":"Failed login attempt","userId":"e5f6g7h8","ip":"203.0.113.42","eventType":"auth"}

{

"_raw": "{\"timestamp\":\"2024-06-11T10:23:45.432Z\",\"level\":\"warn\",\"message\":\"Failed login attempt\",\"userId\":\"e5f6g7h8\",\"ip\":\"203.0.113.42\",\"eventType\":\"auth\"}",

"_time": 1718101425,

"datatype": "cribl_json",

"timestamp": "2024-06-11T10:23:45.432Z",

"level": "warn",

"message": "Failed login attempt",

"userId": "e5f6g7h8",

"ip": "203.0.113.42",

"eventType": "auth"

}

Cribl Search _raw Data

Parses NDJSON-formatted logs where the actual event payload is in the _raw field.

This Datatype ruleset consists of a single rule:

| Rule name | Value of the datatype field added to the event |

|---|---|

| ndjson | cribl_json_raw |
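In this layout, each line is a JSON object whose _raw value is itself a JSON document encoded as a string, so the payload must be decoded twice. Below is a minimal Python sketch; the helper name unwrap_raw is hypothetical.

```python
import json


def unwrap_raw(line: str) -> dict:
    """Hypothetical helper: promote the JSON payload stored as a string in _raw."""
    outer = json.loads(line)      # first decode: {"_raw": "<escaped JSON string>"}
    payload = outer["_raw"]       # still a plain string at this point
    inner = json.loads(payload)   # second decode: the actual event payload
    # Keep the payload string as _raw and lift its keys to top-level fields.
    return {"_raw": payload, "datatype": "cribl_json_raw", **inner}
```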

Here’s an example:

{"_raw":"{\"timestamp\":\"2024-06-11T10:24:13.123Z\",\"level\":\"info\",\"action\":\"login\",\"userId\":\"jdoe\",\"status\":\"success\"}"}

{

"_raw": "{\"timestamp\":\"2024-06-11T10:24:13.123Z\",\"level\":\"info\",\"action\":\"login\",\"userId\":\"jdoe\",\"status\":\"success\"}",

"_time": 1718101453,

"datatype": "cribl_json_raw",

"timestamp": "2024-06-11T10:24:13.123Z",

"level": "info",

"action": "login",

"userId": "jdoe",

"status": "success"

}

CSV Datatypes

Parses CSV-formatted logs.

This Datatype ruleset consists of a single rule:

| Rule name | Value of the datatype field added to the event |

|---|---|

| csv | csv |
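CSV parsing is header-driven: the first row supplies the field names, and each following row becomes one event. The Python sketch below illustrates this with the standard csv module. It assumes a column named timestamp that holds ISO 8601 UTC values. Cribl Search's own timestamp detection is not modeled here.

```python
import csv
import io
from datetime import datetime


def parse_csv(text: str) -> list:
    """Header-driven CSV parsing: the first row names the fields, each later row is one event."""
    events = []
    for row in csv.DictReader(io.StringIO(text)):
        event = {"datatype": "csv", **row}
        ts = row.get("timestamp")  # assumed column name
        if ts:
            # Assumption: ISO 8601 UTC values ending in "Z".
            event["_time"] = int(datetime.fromisoformat(ts.replace("Z", "+00:00")).timestamp())
        events.append(event)
    return events
```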

Here's an example:

"id","timestamp","level","message"

1,"2023-08-01T12:00:00Z","INFO","Service started"

2,"2023-08-01T12:05:00Z","WARN","Connection slow"

3,"2023-08-01T12:10:00Z","ERROR","Service failed"

[

{

"_raw": "1,\"2023-08-01T12:00:00Z\",\"INFO\",\"Service started\"",

"_time": 1690891200,

"datatype": "csv",

"id": "1",

"timestamp": "2023-08-01T12:00:00Z",

"level": "INFO",

"message": "Service started"

},

{

"_raw": "2,\"2023-08-01T12:05:00Z\",\"WARN\",\"Connection slow\"",

"_time": 1690891500,

"datatype": "csv",

"id": "2",

"timestamp": "2023-08-01T12:05:00Z",

"level": "WARN",

"message": "Connection slow"

},

{

"_raw": "3,\"2023-08-01T12:10:00Z\",\"ERROR\",\"Service failed\"",

"_time": 1690891800,

"datatype": "csv",

"id": "3",

"timestamp": "2023-08-01T12:10:00Z",

"level": "ERROR",

"message": "Service failed"

}

]

Microsoft Graph API Datatypes

Parses Microsoft Graph API logs.

This Datatype ruleset consists of the following rules, evaluated top-down:

| # | Rule name | Value of the datatype field added to the event |

|---|---|---|

| 1 | Security Alerts | microsoft_graph_securityalert |

| 2 | Security Alerts - Legacy | microsoft_graph_securityalert_legacy |

| 3 | Service Health | microsoft_graph_servicehealth |

| 4 | Service Issues | microsoft_graph_serviceissues |

| 5 | Messages | microsoft_graph_messages |

| 6 | Users | microsoft_graph_users |

| 7 | Devices | microsoft_graph_devices |

| 8 | Groups | microsoft_graph_groups |

| 9 | Applications | microsoft_graph_applications |
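The Graph API examples below arrive as JSON arrays, and each array element becomes a separate event. This Python sketch shows that expansion. The list of candidate timestamp fields is an assumption inferred from the examples. The caller also passes in the datatype here, whereas in Cribl Search the rule filters choose it.

```python
import json
from datetime import datetime

# Assumption: candidate timestamp fields, inferred from the examples below.
TIME_FIELDS = ("lastModifiedDateTime", "onPremisesLastSyncDateTime", "createdDateTime")


def expand_graph_array(text: str, datatype: str) -> list:
    """Turn a JSON array response into one event per element."""
    events = []
    for item in json.loads(text):
        raw = json.dumps(item, separators=(",", ":"))  # compact, like the _raw values shown
        event = {"_raw": raw, "datatype": datatype, **item}
        for name in TIME_FIELDS:
            if item.get(name):
                parsed = datetime.fromisoformat(item[name].replace("Z", "+00:00"))
                event["_time"] = int(parsed.timestamp())
                break  # first populated candidate wins
        events.append(event)
    return events
```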

See some examples:

--- input ---

[

{

"id": "12345-67890",

"title": "Suspicious Activity",

"severity": "High",

"status": "NewAlert",

"lastModifiedDateTime": "2023-05-20T15:30:00Z",

"description": "User reported suspicious login."

}

]

--- output ---

{

"_raw": "{\"id\":\"12345-67890\",\"title\":\"Suspicious Activity\",\"severity\":\"High\",\"status\":\"NewAlert\",\"lastModifiedDateTime\":\"2023-05-20T15:30:00Z\",\"description\":\"User reported suspicious login.\"}",

"_time": 1684596600,

"datatype": "microsoft_graph_securityalert",

"id": "12345-67890",

"title": "Suspicious Activity",

"severity": "High",

"status": "NewAlert",

"lastModifiedDateTime": "2023-05-20T15:30:00Z",

"description": "User reported suspicious login."

}

--- input ---

[

{

"id": "user-uuid-1234",

"displayName": "Alice Smith",

"mail": "alice@contoso.com",

"userPrincipalName": "alice@contoso.com",

"onPremisesLastSyncDateTime": "2023-06-01T10:00:00Z"

}

]

--- output ---

{

"_raw": "{\"id\":\"user-uuid-1234\",\"displayName\":\"Alice Smith\",\"mail\":\"alice@contoso.com\",\"userPrincipalName\":\"alice@contoso.com\",\"onPremisesLastSyncDateTime\":\"2023-06-01T10:00:00Z\"}",

"_time": 1685613600,

"datatype": "microsoft_graph_users",

"id": "user-uuid-1234",

"displayName": "Alice Smith",

"mail": "alice@contoso.com",

"userPrincipalName": "alice@contoso.com",

"onPremisesLastSyncDateTime": "2023-06-01T10:00:00Z"

}

--- input ---

[

{

"id": "app-uuid-5678",

"displayName": "Payroll App",

"appId": "client-id-1234",

"createdDateTime": "2023-01-15T08:00:00Z"

}

]

--- output ---

{

"_raw": "{\"id\":\"app-uuid-5678\",\"displayName\":\"Payroll App\",\"appId\":\"client-id-1234\",\"createdDateTime\":\"2023-01-15T08:00:00Z\"}",

"_time": 1673769600,

"datatype": "microsoft_graph_applications",

"id": "app-uuid-5678",

"displayName": "Payroll App",

"appId": "client-id-1234",

"createdDateTime": "2023-01-15T08:00:00Z"

}

Microsoft O365 Datatypes

Parses Microsoft 365 (formerly Office 365) logs.

This Datatype ruleset consists of the following rules, evaluated top-down:

| # | Rule name | Value of the datatype field added to the event |

|---|---|---|

| 1 | status | microsoft_office365_status |

| 2 | messages | microsoft_office365_messages |

| 3 | mgmt-activity | microsoft_office365_mgmt-activity |

| 4 | msg-trace | microsoft_office365_msg-trace |
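Several O365 examples below wrap their records in a "value" array, while the management activity example is a bare array. The message trace Received field also carries no time zone. The following Python sketch handles both envelope shapes and treats offset-less timestamps as UTC. The helper names and the UTC assumption are illustrative, not Cribl Search behavior.

```python
import json
from datetime import datetime, timezone
from typing import List


def unwrap_records(text: str) -> List[dict]:
    """Return records from a bare JSON array or from an object that wraps them in "value"."""
    doc = json.loads(text)
    if isinstance(doc, dict) and isinstance(doc.get("value"), list):
        return doc["value"]  # {"value": [...]} envelope
    if isinstance(doc, list):
        return doc  # bare array
    return [doc]  # a lone object is treated as one record


def to_epoch(ts: str) -> float:
    """Parse an ISO 8601 timestamp into epoch seconds; offset-less values are assumed UTC."""
    parsed = datetime.fromisoformat(ts.replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)  # assumption, e.g. message trace "Received"
    return parsed.timestamp()
```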

See some examples:

---input---

{

"value": [

{

"Id": "ServiceStatus",

"Workload": "Exchange",

"StatusDisplayName": "Service degradation",

"Status": "ServiceDegradation",

"StatusTime": "2023-05-15T12:00:00Z",

"WorkloadDisplayName": "Exchange Online",

"IncidentIds": ["EX123456"]

}

]

}

---output---

{

"_raw": "{\"Id\":\"ServiceStatus\",\"Workload\":\"Exchange\",\"StatusDisplayName\":\"Service degradation\",\"Status\":\"ServiceDegradation\",\"StatusTime\":\"2023-05-15T12:00:00Z\",\"WorkloadDisplayName\":\"Exchange Online\",\"IncidentIds\":[\"EX123456\"]}",

"_time": 1684152000,

"datatype": "microsoft_office365_status",

"Id": "ServiceStatus",

"Workload": "Exchange",

"StatusDisplayName": "Service degradation",

"Status": "ServiceDegradation",

"StatusTime": "2023-05-15T12:00:00Z",

"WorkloadDisplayName": "Exchange Online",

"IncidentIds": [

"EX123456"

]

}

---input---

{

"value": [

{

"Id": "MC123456",

"Title": "New Feature: SharePoint",

"MessageType": "MessageCenter",

"lastModifiedDateTime": "2023-06-01T10:00:00Z",

"ActionType": "ActionRequired"

}

]

}

---output---

{

"_raw": "{\"Id\":\"MC123456\",\"Title\":\"New Feature: SharePoint\",\"MessageType\":\"MessageCenter\",\"lastModifiedDateTime\":\"2023-06-01T10:00:00Z\",\"ActionType\":\"ActionRequired\"}",

"_time": 1685613600,

"datatype": "microsoft_office365_messages",

"Id": "MC123456",

"Title": "New Feature: SharePoint",

"MessageType": "MessageCenter",

"lastModifiedDateTime": "2023-06-01T10:00:00Z",

"ActionType": "ActionRequired"

}

---input---

[

{

"CreationTime": "2024-11-15T14:23:45Z",

"Id": "f9caa9e1-7b2b-4f1c-a0b3-6c3f2c9b828a",

"Operation": "FileAccessed",

"Workload": "SharePoint",

"UserId": "john.smith@contoso.com",

"ObjectId": "https://contoso.sharepoint.com/sites/Finance/Budget.xlsx"

}

]

---output---

{

"_raw": "{\"CreationTime\":\"2024-11-15T14:23:45Z\",\"Id\":\"f9caa9e1-7b2b-4f1c-a0b3-6c3f2c9b828a\",\"Operation\":\"FileAccessed\",\"Workload\":\"SharePoint\",\"UserId\":\"john.smith@contoso.com\",\"ObjectId\":\"https://contoso.sharepoint.com/sites/Finance/Budget.xlsx\"}",

"_time": 1731680625,

"datatype": "microsoft_office365_mgmt-activity",

"CreationTime": "2024-11-15T14:23:45Z",

"Id": "f9caa9e1-7b2b-4f1c-a0b3-6c3f2c9b828a",

"Operation": "FileAccessed",

"Workload": "SharePoint",

"UserId": "john.smith@contoso.com",

"ObjectId": "https://contoso.sharepoint.com/sites/Finance/Budget.xlsx"

}

---input---

{

"value": [

{

"Received": "2023-07-20T08:30:00.123456",

"SenderAddress": "sender@example.com",

"RecipientAddress": "recipient@contoso.com",

"Subject": "Project Update",

"Status": "Delivered"

}

]

}

---output---

{

"_raw": "{\"Received\":\"2023-07-20T08:30:00.123456\",\"SenderAddress\":\"sender@example.com\",\"RecipientAddress\":\"recipient@contoso.com\",\"Subject\":\"Project Update\",\"Status\":\"Delivered\"}",

"_time": 1689841800.123456,

"datatype": "microsoft_office365_msg-trace",

"Received": "2023-07-20T08:30:00.123456",

"SenderAddress": "sender@example.com",

"RecipientAddress": "recipient@contoso.com",

"Subject": "Project Update",

"Status": "Delivered"

}

Microsoft Windows Datatypes

Parses Windows Event Log formats (classic and XML).

This Datatype ruleset consists of the following rules, evaluated top-down:

| # | Rule name | Value of the datatype field added to the event |

|---|---|---|

| 1 | Windows Event Log Classic | windows_event_classic |

| 2 | Windows XML Events | windows_event_xml |
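The classic format is a timestamp line, followed by "Key: Value" lines and a free-text description. The Python sketch below parses that layout into the snake_case field names shown in the example. The parser is hypothetical. It treats the timestamp as UTC because the classic text carries no time zone.

```python
import re
from datetime import datetime, timezone


def parse_classic_event(text: str) -> dict:
    """Hypothetical parser for the classic 'Key: Value' Windows event text layout."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    # First line is the timestamp, e.g. "06/15/2023 07:20:51 PM"; assumed to be UTC.
    stamp = datetime.strptime(lines[0], "%m/%d/%Y %I:%M:%S %p").replace(tzinfo=timezone.utc)
    event = {"_time": int(stamp.timestamp()), "datatype": "windows_event_classic"}
    desc_lines = []
    in_description = False
    for line in lines[1:]:
        if in_description:
            desc_lines.append(line)  # everything after "Description:" is free text
            continue
        key, sep, value = line.partition(":")
        if not sep:
            continue  # not a "Key: Value" line
        # CamelCase to snake_case: "LogName" -> "log_name", "EventID" -> "event_id"
        snake = re.sub(r"(?<=[a-z])(?=[A-Z])", "_", key.strip()).lower()
        if snake == "description":
            in_description = True
            if value.strip():
                desc_lines.append(value.strip())
        else:
            event[snake] = value.strip()
    event["description"] = " ".join(desc_lines)
    return event
```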

See some examples:

---input---

06/15/2023 07:20:51 PM

LogName: Security

Source: Microsoft-Windows-Security-Auditing

EventID: 4624

Level: Information

User: N/A

Computer: WIN-7PC

Description:

An account was successfully logged on.

---output---

{

"_raw": "06/15/2023 07:20:51 PM LogName: Security Source: Microsoft-Windows-Security-Auditing EventID: 4624 Level: Information User: N/A Computer: WIN-7PC Description: An account was successfully logged on.",

"_time": 1686856851,

"datatype": "windows_event_classic",

"log_name": "Security",

"source": "Microsoft-Windows-Security-Auditing",

"event_id": "4624",

"level": "Information",

"user": "N/A",

"computer": "WIN-7PC",

"description": "An account was successfully logged on."

}

---input---

<Event xmlns='http://schemas.microsoft.com/win/2004/08/events/event'>

<System>

<Provider Name='Microsoft-Windows-Security-Auditing'/>

<EventID>4624</EventID>

<TimeCreated SystemTime='2023-06-15T19:20:51.000000000Z'/>

<Channel>Security</Channel>

<Computer>WIN-7PC</Computer>

</System>

<EventData>

<Data Name='TargetUserName'>User1</Data>

<Data Name='TargetDomainName'>WIN-7PC</Data>

<Data Name='LogonType'>2</Data>

</EventData>

</Event>

---output---

{

"_raw": "<Event xmlns='http://schemas.microsoft.com/win/2004/08/events/event'><System><Provider Name='Microsoft-Windows-Security-Auditing'/><EventID>4624</EventID><TimeCreated SystemTime='2023-06-15T19:20:51.000000000Z'/><Channel>Security</Channel><Computer>WIN-7PC</Computer></System><EventData><Data Name='TargetUserName'>User1</Data><Data Name='TargetDomainName'>WIN-7PC</Data><Data Name='LogonType'>2</Data></EventData></Event>",

"_time": 1686856851,

"datatype": "windows_event_xml",

"EventID": "4624",

"ProviderName": "Microsoft-Windows-Security-Auditing",

"Channel": "Security",

"Computer": "WIN-7PC",

"TargetUserName": "User1",

"TargetDomainName": "WIN-7PC",

"LogonType": "2"

}

OCSF Datatypes

Parses logs in the Open Cybersecurity Schema Framework (OCSF) format.

This Datatype ruleset consists of a single rule:

| Rule name | Value of the datatype field added to the event |

|---|---|

| ndjson | Value of the source event's category_name field. For example, for "category_name": "Network Activity", Cribl Search sets the datatype field to "Network Activity". |
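OCSF expresses time as milliseconds since the Unix epoch, while _time is in seconds. This Python sketch shows the two derivations the table and example imply: datatype copied from category_name, and _time computed from time. It is an illustration only; the function name ocsf_event is hypothetical.

```python
import json


def ocsf_event(line: str) -> dict:
    """Sketch: datatype comes from category_name; _time from the millisecond epoch in "time"."""
    fields = json.loads(line)
    event = {"_raw": line, **fields}
    event["datatype"] = fields.get("category_name")  # None if the field is absent
    if isinstance(fields.get("time"), (int, float)):
        event["_time"] = fields["time"] / 1000  # epoch milliseconds -> epoch seconds
    return event
```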

Here’s an example:

{

"category_uid": 1,

"category_name": "Network Activity",

"class_uid": 100,

"class_name": "Network Flow",

"activity_id": 1001,

"activity_name": "Connection Allowed",

"metadata": {

"version": "1.1.0",

"product": "Example Product",

"vendor_name": "Example Vendor",

"profile": "network_activity"

},

"time": 1718000000000,

"src_endpoint": {

"ip": "192.168.0.10",

"port": 44321

},

"dst_endpoint": {

"ip": "93.184.216.34",

"port": 443

},

"protocol": "TCP",

"duration": 5042,

"bytes_in": 23456,

"bytes_out": 11432,

"outcome": "SUCCESS"

}

{

"_raw": "{\"category_uid\":1,\"category_name\":\"Network Activity\",\"class_uid\":100,\"class_name\":\"Network Flow\",\"activity_id\":1001,\"activity_name\":\"Connection Allowed\",\"metadata\":{\"version\":\"1.1.0\",\"product\":\"Example Product\",\"vendor_name\":\"Example Vendor\",\"profile\":\"network_activity\"},\"time\":1718000000000,\"src_endpoint\":{\"ip\":\"192.168.0.10\",\"port\":44321},\"dst_endpoint\":{\"ip\":\"93.184.216.34\",\"port\":443},\"protocol\":\"TCP\",\"duration\":5042,\"bytes_in\":23456,\"bytes_out\":11432,\"outcome\":\"SUCCESS\"}",

"_time": 1718000000,

"datatype": "Network Activity",

"category_uid": 1,

"category_name": "Network Activity",

"class_uid": 100,

"class_name": "Network Flow",

"activity_id": 1001,

"activity_name": "Connection Allowed",

"metadata": {

"version": "1.1.0",

"product": "Example Product",

"vendor_name": "Example Vendor",

"profile": "network_activity"

},

"time": 1718000000000,

"src_endpoint": {

"ip": "192.168.0.10",

"port": 44321

},

"dst_endpoint": {

"ip": "93.184.216.34",

"port": 443

},

"protocol": "TCP",

"duration": 5042,

"bytes_in": 23456,

"bytes_out": 11432,

"outcome": "SUCCESS"

}

Palo Alto Datatypes

Parses Palo Alto firewall logs.

This Datatype ruleset consists of the following rules, evaluated top-down:

| # | Rule name | Value of the datatype field added to the event |

|---|---|---|

| 1 | Palo Alto Traffic | pan_traffic |

| 2 | Palo Alto Threat | pan_threat |

| 3 | Palo Alto System | pan_system |

| 4 | Palo Alto Config | pan_config |
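The examples below differ mainly in the value of their type field. This Python sketch maps that value to a datatype and parses receive_time. That field has seven fractional digits, more than the microsecond precision Python's datetime supports. Keying on type, and assuming UTC values that end in Z, are assumptions for illustration. The actual rule filters may use other criteria.

```python
from datetime import datetime, timezone
from typing import Optional

# Assumed mapping from the log's "type" value to the datatype each rule assigns.
PAN_TYPES = {
    "TRAFFIC": "pan_traffic",
    "THREAT": "pan_threat",
    "SYSTEM": "pan_system",
    "CONFIG": "pan_config",
}


def classify_pan(event: dict) -> Optional[str]:
    """Return the datatype for a Palo Alto event, or None if no rule applies."""
    return PAN_TYPES.get(str(event.get("type", "")).upper())


def pan_time(ts: str) -> float:
    """Parse values such as 2023-05-15T12:00:00.0000000Z (assumed UTC, ending in Z)."""
    body = ts.rstrip("Z")
    if "." in body:
        whole, frac = body.split(".", 1)
        body = f"{whole}.{frac[:6].ljust(6, '0')}"  # datetime keeps microseconds at most
    return datetime.fromisoformat(body).replace(tzinfo=timezone.utc).timestamp()
```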

See some examples:

--- input ---

{

"receive_time": "2023-05-15T12:00:00.0000000Z",

"serial_number": "1234567890",

"type": "TRAFFIC",

"threat_content_type": "None",

"generated_time": "2023-05-15T12:00:00.0000000Z",

"source_ip": "192.168.0.10",

"destination_ip": "93.184.216.34"

}

--- output ---

{

"_raw": "{\"receive_time\":\"2023-05-15T12:00:00.0000000Z\",\"serial_number\":\"1234567890\",\"type\":\"TRAFFIC\",\"threat_content_type\":\"None\",\"generated_time\":\"2023-05-15T12:00:00.0000000Z\",\"source_ip\":\"192.168.0.10\",\"destination_ip\":\"93.184.216.34\"}",

"_time": 1684152000,

"datatype": "pan_traffic",

"receive_time": "2023-05-15T12:00:00.0000000Z",

"serial_number": "1234567890",

"type": "TRAFFIC",

"threat_content_type": "None",

"generated_time": "2023-05-15T12:00:00.0000000Z",

"source_ip": "192.168.0.10",

"destination_ip": "93.184.216.34"

}

--- input ---

{

"receive_time": "2023-05-15T12:00:00.0000000Z",

"serial_number": "1234567890",

"type": "THREAT",

"threat_content_type": "virus",

"generated_time": "2023-05-15T12:00:00.0000000Z",

"source_ip": "10.0.0.1",

"destination_ip": "8.8.8.8",

"source_port": 37841,

"destination_port": 80,

"nat_source_ip": "192.0.2.10",

"nat_destination_ip": "8.8.8.8",

"rule": "Outbound-Virus-Scan",

"threat_id": "12345",

"category": "malware",

"severity": "high",

"direction": "outbound",

"action": "blocked",

"threat_name": "Eicar-Test-File",

"file_name": "eicar.com",

"app": "web-browsing"

}

---output---

{

"_raw": "{\"receive_time\":\"2023-05-15T12:00:00.0000000Z\",\"serial_number\":\"1234567890\",\"type\":\"THREAT\",\"threat_content_type\":\"virus\",\"generated_time\":\"2023-05-15T12:00:00.0000000Z\",\"source_ip\":\"10.0.0.1\",\"destination_ip\":\"8.8.8.8\",\"source_port\":37841,\"destination_port\":80,\"nat_source_ip\":\"192.0.2.10\",\"nat_destination_ip\":\"8.8.8.8\",\"rule\":\"Outbound-Virus-Scan\",\"threat_id\":\"12345\",\"category\":\"malware\",\"severity\":\"high\",\"direction\":\"outbound\",\"action\":\"blocked\",\"threat_name\":\"Eicar-Test-File\",\"file_name\":\"eicar.com\",\"app\":\"web-browsing\"}",

"_time": 1684152000,

"datatype": "pan_threat",

"receive_time": "2023-05-15T12:00:00.0000000Z",

"serial_number": "1234567890",

"type": "THREAT",

"threat_content_type": "virus",

"generated_time": "2023-05-15T12:00:00.0000000Z",

"source_ip": "10.0.0.1",

"destination_ip": "8.8.8.8",

"source_port": 37841,

"destination_port": 80,

"nat_source_ip": "192.0.2.10",

"nat_destination_ip": "8.8.8.8",

"rule": "Outbound-Virus-Scan",

"threat_id": "12345",

"category": "malware",

"severity": "high",

"direction": "outbound",

"action": "blocked",

"threat_name": "Eicar-Test-File",

"file_name": "eicar.com",

"app": "web-browsing"

}

--- input ---

{

"receive_time": "2023-05-15T12:00:00.0000000Z",

"serial_number": "1234567890",

"type": "SYSTEM",

"threat_content_type": "None",

"generated_time": "2023-05-15T12:00:00.0000000Z",

"source_ip": "192.168.0.10",

"destination_ip": "93.184.216.34"

}

---output---

{

"_raw": "{\"receive_time\":\"2023-05-15T12:00:00.0000000Z\",\"serial_number\":\"1234567890\",\"type\":\"SYSTEM\",\"threat_content_type\":\"None\",\"generated_time\":\"2023-05-15T12:00:00.0000000Z\",\"source_ip\":\"192.168.0.10\",\"destination_ip\":\"93.184.216.34\"}",

"_time": 1684152000,

"datatype": "pan_system",

"receive_time": "2023-05-15T12:00:00.0000000Z",

"serial_number": "1234567890",

"type": "SYSTEM",

"threat_content_type": "None",

"generated_time": "2023-05-15T12:00:00.0000000Z",

"source_ip": "192.168.0.10",

"destination_ip": "93.184.216.34"

}

--- input ---

{

"receive_time": "2023-05-15T12:00:00.0000000Z",

"serial_number": "1234567890",

"type": "CONFIG",

"threat_content_type": "None",

"generated_time": "2023-05-15T12:00:00.0000000Z",

"admin": "admin",

"client": "Web",

"config_ver": "42",

"cmd": "set",

"result": "Succeeded",

"before_change_detail": "none",

"after_change_detail": "commit status: completed",

"source_ip": "192.168.0.10",

"destination_ip": "93.184.216.34"

}

---output---

{

"_raw": "{\"receive_time\":\"2023-05-15T12:00:00.0000000Z\",\"serial_number\":\"1234567890\",\"type\":\"CONFIG\",\"threat_content_type\":\"None\",\"generated_time\":\"2023-05-15T12:00:00.0000000Z\",\"admin\":\"admin\",\"client\":\"Web\",\"config_ver\":\"42\",\"cmd\":\"set\",\"result\":\"Succeeded\",\"before_change_detail\":\"none\",\"after_change_detail\":\"commit status: completed\",\"source_ip\":\"192.168.0.10\",\"destination_ip\":\"93.184.216.34\"}",

"_time": 1684152000,

"datatype": "pan_config",

"receive_time": "2023-05-15T12:00:00.0000000Z",

"serial_number": "1234567890",

"type": "CONFIG",

"threat_content_type": "None",

"generated_time": "2023-05-15T12:00:00.0000000Z",

"admin": "admin",

"client": "Web",

"config_ver": "42",

"cmd": "set",

"result": "Succeeded",

"before_change_detail": "none",

"after_change_detail": "commit status: completed",

"source_ip": "192.168.0.10",

"destination_ip": "93.184.216.34"

}

Syslog Datatypes

Parses syslog messages.

This Datatype ruleset consists of the following rules, evaluated top-down:

| # | Rule name | Value of the datatype field added to the event |

|---|---|---|

| 1 | RFC3164 | syslog |

| 2 | RFC5424 | syslog |
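Both rules assign syslog, but they parse different header layouts. The Python sketch below parses the RFC 5424 layout, including structured data. It also splits the priority into facility and severity, since PRI = facility * 8 + severity. Those two fields are extras that the example output does not include, and RFC 3164 parsing is not covered. The regular expressions are a simplified sketch, not Cribl Search's parser.

```python
import re

# One SD-ELEMENT: [SD-ID PARAM="value" ...]; values may contain \" \\ \] escapes.
SD_ELEMENT = r'\[[^\]\s]+(?:\s+[^=\s\]]+="(?:[^"\\]|\\.)*")*\]'
HEADER = re.compile(
    r'^<(?P<pri>\d{1,3})>(?P<version>\d{1,2}) (?P<timestamp>\S+) (?P<host>\S+) '
    r'(?P<app_name>\S+) (?P<procid>\S+) (?P<msgid>\S+) '
    r'(?P<sd>-|(?:' + SD_ELEMENT + r')+)(?: (?P<message>.*))?$'
)
ELEMENT = re.compile(r'\[([^\]\s]+)((?:\s+[^=\s\]]+="(?:[^"\\]|\\.)*")*)\]')
PARAM = re.compile(r'([^=\s\]]+)="((?:[^"\\]|\\.)*)"')


def parse_rfc5424(line: str):
    """Parse an RFC 5424 syslog line into fields; return None if the header does not match."""
    m = HEADER.match(line)
    if m is None:
        return None
    pri = int(m["pri"])
    structured = {}
    if m["sd"] != "-":
        for sd_id, params in ELEMENT.findall(m["sd"]):
            structured[sd_id] = {
                name: re.sub(r'\\(["\\\]])', r"\1", value)  # undo \" \\ \] escapes
                for name, value in PARAM.findall(params)
            }
    return {
        "priority": pri,
        "facility": pri // 8,  # PRI = facility * 8 + severity
        "severity": pri % 8,
        "version": int(m["version"]),
        "timestamp": m["timestamp"],
        "host": m["host"],
        "app_name": m["app_name"],
        "procid": m["procid"],
        "msgid": m["msgid"],
        "structured_data": structured,
        "message": m["message"] or "",
    }
```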

See some examples:

---input---

<34>Oct 11 22:14:15 mymachine su: 'su root' failed for user on /dev/pts/3

---output---

{

"_raw": "<34>Oct 11 22:14:15 mymachine su: 'su root' failed for user on /dev/pts/3",

"_time": 436917255,

"datatype": "syslog",

"priority": 34,

"timestamp": "Oct 11 22:14:15",

"host": "mymachine",

"app_name": "su",

"message": "'su root' failed for user on /dev/pts/3"

}

---input---

<165>1 2022-12-17T23:56:10.123Z mymachine appname 12345 ID47 [exampleSDID@32473 iut="3" eventSource="Application" eventID="1011"] An application event log entry

---output---

{

"_raw": "<165>1 2022-12-17T23:56:10.123Z mymachine appname 12345 ID47 [exampleSDID@32473 iut=\"3\" eventSource=\"Application\" eventID=\"1011\"] An application event log entry",

"_time": 1671321370,

"datatype": "syslog",

"priority": 165,

"version": 1,

"timestamp": "2022-12-17T23:56:10.123Z",

"host": "mymachine",

"app_name": "appname",

"procid": "12345",

"msgid": "ID47",

"structured_data": {

"exampleSDID@32473": {

"iut": "3",

"eventSource": "Application",

"eventID": "1011"

}

},

"message": "An application event log entry"

}

Zeek Datatypes

Parses Zeek logs.

This Datatype ruleset consists of a single rule:

| Rule name | Value of the datatype field added to the event |

|---|---|

| Zeek | zeek |

Here’s an example:

192.168.1.100 443 192.168.1.50 53212 tcp ssl 1532476123.119112 C12nJ1nN6uC5fC3qld 664 589 1 F ShADadf 0 0 0.000058

{

"_raw": "192.168.1.100\t443\t192.168.1.50\t53212\ttcp\tssl\t1532476123.119112\tC12nJ1nN6uC5fC3qld\t664\t589\t1\tF\tShADadf\t0\t0\t0.000058",

"_time": 1532476123,

"datatype": "zeek",

"id_orig_h": "192.168.1.100",

"id_orig_p": 443,

"id_resp_h": "192.168.1.50",

"id_resp_p": 53212,

"proto": "tcp",

"service": "ssl",

"ts": 1532476123.119112,

"uid": "C12nJ1nN6uC5fC3qld",

"orig_bytes": 664,

"resp_bytes": 589,

"conn_state": "F",

"history": "ShADadf",

"missed_bytes": 0,

"orig_pkts": 0,

"orig_ip_bytes": 0,

"duration": 0.000058

}
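The example above is a single headerless line. Zeek's ASCII log files normally begin with metadata lines, including a #fields line that names each tab-separated column. The following Python sketch assumes such a header is present and names each value from it. It also renames dotted names like id.orig_h to the underscore form used above, and drops Zeek's default unset (-) and empty ((empty)) markers. Values stay as strings; type conversion from the #types line is omitted, and the function name is hypothetical.

```python
def parse_zeek_tsv(text: str) -> list:
    """Parse Zeek ASCII (TSV) log text, naming columns from its "#fields" header line."""
    fields = []
    events = []
    for line in text.splitlines():
        if not line:
            continue
        if line.startswith("#"):
            if line.startswith("#fields\t"):
                fields = line.split("\t")[1:]  # column names follow the "#fields" tag
            continue  # other "#" lines (#separator, #types, #close, ...) are metadata
        event = {"_raw": line}
        for name, value in zip(fields, line.split("\t")):
            if value in ("-", "(empty)"):
                continue  # Zeek's default unset and empty markers
            event[name.replace(".", "_")] = value
        events.append(event)
    return events
```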