These docs are for Cribl Stream 4.4 and are no longer actively maintained.

See the latest version (4.16).

Sources

Cribl Stream can receive continuous data input from various Sources, including Splunk, HTTP, Elastic Beats, Kinesis, Kafka, TCP JSON, and many others. Sources can receive data from either IPv4 or IPv6 addresses.

COLLECTOR Sources

Collectors, the top group of Sources in Cribl Stream’s UI, are designed to ingest data intermittently - in on-demand bursts (“ad hoc collection”), or on preset schedules, or by “replaying” data from local or remote stores.

These Sources can ingest data only if a Leader is active:

- Amazon S3

- Azure Blob Storage

- Database

- File System/NFS

- Google Cloud Storage

- Health Check

- REST/API Endpoint

- Script

- Splunk Search

For background and instructions on using Collectors, see:

Check out the example REST Collector configurations in Cribl’s Collector Templates repository. For many popular Collectors, the repo provides configurations (with companion Event Breakers, and event samples in some cases) that you can import into your Cribl Stream instance, saving the time you’d have spent building them yourself.

PUSH Sources

Supported data Sources that send to Cribl Stream.

In the absence of an active Leader, these Sources can generally continue data ingestion. However, some Sources may experience functional limitations during prolonged Leader unavailability. See the respective Source documentation for details.

- Amazon Kinesis Firehose

- AppScope

- Datadog Agent

- Elasticsearch API

- Grafana

- HTTP/S (Bulk API)

- Raw HTTP/S

- Loki

- Metrics

- OpenTelemetry (OTel)

- Prometheus Remote Write

- SNMP Trap

- Splunk HEC

- Splunk TCP

- Syslog

- TCP JSON

- TCP (Raw)

- UDP (Raw)

- Windows Event Forwarder

Data from these Sources is normally sent to a set of Cribl Stream Workers through a load balancer. Some Sources, such as Splunk forwarders, have native load-balancing capabilities, so you should point these directly at Cribl Stream.

PULL Sources

Supported Sources that Cribl Stream fetches data from.

- Amazon Kinesis Data Streams (HTTPS only) - requires active Leader

- Amazon SQS (HTTPS only)

- Amazon S3 (HTTPS only)

- Google Cloud Pub/Sub (HTTPS only)

- Azure Event Hubs (TCP)

- Azure Blob Storage (HTTPS only)

- Confluent Cloud (TCP)

- CrowdStrike FDR (HTTPS only)

- Office 365 Services (HTTPS only) - requires active Leader for job management

- Office 365 Activity (HTTPS only) - requires active Leader for job management

- Office 365 Message Trace (HTTPS only) - requires active Leader for job management

- Prometheus Scraper (HTTP/S) - requires active Leader for job management

- Kafka (TCP)

- Amazon MSK (TCP)

- Splunk Search (HTTP/S) - requires active Leader for job management

System Sources

Sources that supply information generated by Cribl Stream about itself or from files that it monitors, or that move data among Workers within your Cribl deployment.

Internal Sources

Similar to System Sources, Internal Sources also supply information generated by Cribl Stream about itself. However, unlike System Sources, they don’t count towards license usage.

Configuring and Managing Sources

For each Source type, you can create multiple definitions, depending on your requirements.

From the top nav, click Manage, then select a Worker Group to configure. Next, you have two options:

To configure via the graphical QuickConnect UI, click Routing > QuickConnect (Stream) or Collect (Edge). Next, click Add Source at left. From the resulting drawer’s tiles, select the desired Source. Next, click either Add Destination or (if displayed) Select Existing.

Or, to configure via the Routing UI, click Data > Sources (Stream) or More > Sources (Edge). From the resulting page’s tiles or left nav, select the desired Source. Next, click New Source to open a New Source modal.

To edit any Source’s definition in a JSON text editor, click Manage as JSON at the bottom of the New Source modal, or on the Configure tab when editing an existing Source. You can directly edit multiple values, and you can use the Import and Export buttons to copy and modify existing Source configurations as .json files.

When JSON configuration contains sensitive information, it is redacted during export.

Capturing Source Data

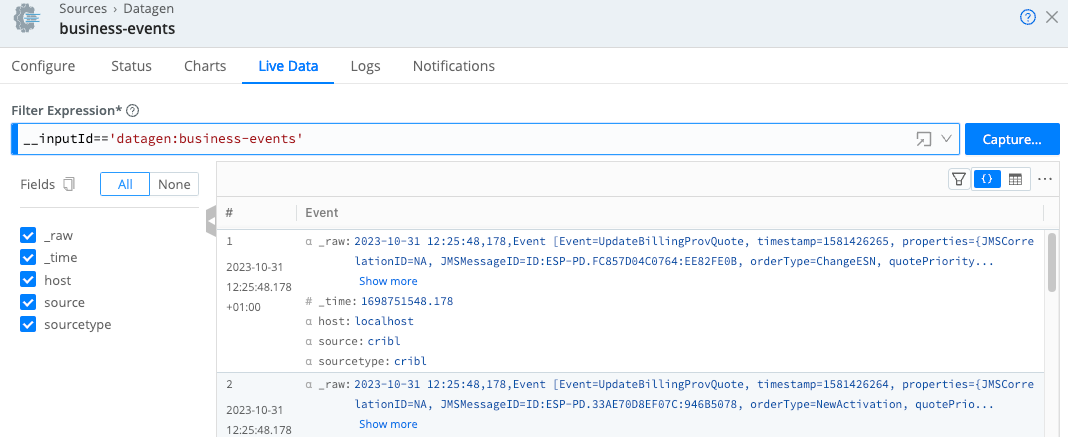

To capture data from a single enabled Source, you can bypass the Preview pane, and instead capture directly from a Manage Sources page. Just click the Live button beside the Source you want to capture.

To capture live data, you must have Worker Nodes registered to the Worker Group for which you’re viewing events. You can view registered Worker Nodes from the Status tab in the Source.

You can also start an immediate capture from within an enabled Source’s config modal, by clicking the modal’s Live Data tab.

Preconfigured Sources

To accelerate your setup, Cribl Stream ships with several common Sources configured for typical listening ports, but not switched on. Open, clone (if desired), modify, and enable any of these preconfigured Sources to get started quickly:

- Syslog - TCP Port 9514, UDP Port 9514

- Splunk TCP - Port 9997

- Splunk HEC - Port 8088

- TCP JSON - Port 10070

- TCP - Port 10060

- HTTP - Port 10080

- Elasticsearch API - Port 9200

- SNMP Trap - Port 9162 (preconfigured only on on-prem setups)

- OpenTelemetry - Port 4317 (preconfigured only on Cribl.Cloud)

System and Internal Sources:

- Cribl Internal > CriblLogs (preconfigured only on on-prem setups)

- Cribl Internal > CriblMetrics

- AppScope

- Cribl HTTP - Port 10200 (preconfigured only on distributed setups)

- Cribl TCP - Port 10300 (preconfigured only on distributed setups)

- System Metrics (preconfigured only on on-prem setups)

- System State (preconfigured only on on-prem setups)

- Journal files (preconfigured only on on-prem setups)
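As a rough illustration of sending to one of the listening ports above, the following Python sketch writes events to the preconfigured TCP JSON port (10070). It assumes that the TCP JSON Source has been enabled, that it accepts newline-delimited JSON, and that no auth token is required. The helper names `encode_ndjson` and `send_events`, and any hostname you pass in, are hypothetical and not part of Cribl Stream.

```python
import json
import socket

# Port taken from the preconfigured Sources list above.
TCP_JSON_PORT = 10070


def encode_ndjson(events):
    """Serialize each event as compact JSON on its own line (assumed framing)."""
    lines = (json.dumps(event, separators=(",", ":")) + "\n" for event in events)
    return "".join(lines).encode("utf-8")


def send_events(host, events, port=TCP_JSON_PORT, timeout=5.0):
    """Open a TCP connection, send all events, and return the byte count sent."""
    payload = encode_ndjson(events)
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(payload)
    return len(payload)
```

For example, `send_events("worker.example.internal", [{"_raw": "hello"}])` would send a single event, where the hostname is a placeholder for one of your Workers or your load balancer.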

In a preconfigured Source’s configuration, never change the Address field, even though the UI shows it as editable. If you change this field’s value, the Source will not work as expected.

After you create a Source and deploy the changes, it can take a few minutes for the Source to become available in Cribl.Cloud’s load balancer. However, Cribl Stream will open the port, and will be able to receive data, immediately.

Cribl.Cloud Ports and TLS Configurations

Cribl.Cloud provides several data Sources and ports already enabled for you, plus 11 additional TCP ports (20000-20010) that you can use to add and configure more Sources.

The Cribl.Cloud portal’s Data Sources tab displays the pre-enabled Sources, their endpoints, the reserved and available ports, and protocol details. For each existing Source listed here, Cribl recommends using the preconfigured endpoint and port to send data into Cribl Stream.
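The additional port range is inclusive at both ends, which is why 20000-20010 yields 11 ports. A minimal sketch for picking the next unused port from that range when planning new Sources follows. The helper `next_free_port` and the idea of tracking used ports in a set are illustrative assumptions, not a Cribl.Cloud feature.

```python
# The additional Cribl.Cloud TCP ports, 20000 through 20010 inclusive.
CLOUD_EXTRA_PORTS = range(20000, 20011)


def next_free_port(ports_in_use):
    """Return the lowest additional port not yet assigned, or None if all are taken."""
    for port in CLOUD_EXTRA_PORTS:
        if port not in ports_in_use:
            return port
    return None
```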

Cribl HTTP and Cribl TCP Sources/Destinations

Use the Cribl HTTP Destination and Source, and/or the Cribl TCP Destination and Source, to relay data between Worker Nodes connected to the same Leader. This traffic does not count against your ingestion quota, so this routing prevents double-billing. (For related details, see Exemptions from License Quotas.)

Backpressure Behavior and Persistent Queues

By default, a Cribl Stream Source will respond to a backpressure situation - a situation where its in-memory buffer is overwhelmed with data, and/or downstream Destinations/receivers are unavailable - by blocking incoming data. The Source will refuse to accept new data until it can flush its buffer.

This propagates block signals back to the sender, if the sender supports backpressure. UDP senders (including SNMP Traps and some syslog senders) do not, so their new events are simply dropped (discarded) until the Source can process them.
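The default behavior described above can be modeled with a small bounded buffer. This is a conceptual sketch only: the class `BlockingSource`, its capacity parameter, and the string results are hypothetical, and Cribl Stream’s actual buffering is internal to the product.

```python
from collections import deque


class BlockingSource:
    """Toy model of a Source that refuses new data while its buffer is full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.buffer = deque()

    def offer(self, event, sender_supports_backpressure):
        """Try to accept one event and report what happens to it."""
        if len(self.buffer) < self.capacity:
            self.buffer.append(event)
            return "accepted"
        # Buffer is full: a backpressure-aware sender waits and retries,
        # while a sender without that support (such as UDP) loses the event.
        return "blocked" if sender_supports_backpressure else "dropped"

    def flush(self):
        """Hand all buffered events downstream, freeing the buffer."""
        flushed = list(self.buffer)
        self.buffer.clear()
        return flushed
```

In this model, a TCP-style sender that receives `"blocked"` can retry the same event after `flush()` succeeds, whereas a `"dropped"` event is gone.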

Persistent Queues

Push Sources’ config modals provide a Persistent Queue Settings option to minimize loss of inbound streaming data. Here, the Source will write data to disk until its in-memory buffer recovers. Then, it will drain the disk-queued data in FIFO (first in, first out) order.

When you enable Source PQ, you can choose between two trigger conditions: Smart Mode will engage PQ upon backpressure from Destinations, whereas Always On Mode will use PQ as a buffer for all events.

For details about the PQ option and these modes, see Persistent Queues.

Persistent Queues, when engaged, slow down data throughput somewhat. It is redundant to enable PQ on a Source whose upstream sender is configured to safeguard events in its own disk buffer.
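The two trigger conditions and the FIFO drain can be sketched as follows. This is a simplified model under stated assumptions: the class `SourceWithPQ` is hypothetical, a `deque` stands in for the on-disk queue, and the model assumes that in Smart Mode new events keep going to the queue until it has drained, so that ordering is preserved.

```python
from collections import deque


class SourceWithPQ:
    """Toy model of a Source with a persistent queue (PQ)."""

    def __init__(self, memory_capacity, mode="smart"):
        if mode not in ("smart", "always_on"):
            raise ValueError("mode must be 'smart' or 'always_on'")
        self.memory_capacity = memory_capacity
        self.mode = mode
        self.memory = deque()  # in-memory buffer
        self.disk = deque()    # stand-in for the on-disk queue

    def receive(self, event):
        """Route an event to memory, or to the PQ when it is engaged."""
        backpressured = len(self.memory) >= self.memory_capacity
        if self.mode == "always_on" or backpressured or self.disk:
            self.disk.append(event)
        else:
            self.memory.append(event)

    def flush(self, max_events):
        """Deliver up to max_events downstream, oldest first."""
        delivered = []
        for _ in range(max_events):
            if not self.memory and self.disk:
                # Memory has recovered: drain the PQ in FIFO order.
                self.memory.append(self.disk.popleft())
            if not self.memory:
                break
            delivered.append(self.memory.popleft())
        return delivered
```

With `mode="smart"`, events bypass the queue entirely until the in-memory buffer fills. With `mode="always_on"`, every event passes through the queue first.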

Other Backpressure Options

The S3 Source provides a configurable Advanced Settings > Socket timeout option, to prevent data loss (partial downloading of logs) during backpressure delays.

Diagnosing Backpressure Errors

When backpressure affects HTTP Sources (Splunk HEC, HTTP/S, Raw HTTP/S, and Kinesis Firehose), Cribl Stream internal logs will show a 503 error code.
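On the sending side, one common way to cope with a 503 is to retry with exponential backoff. The sketch below assumes that the client also receives the 503 status that appears in the internal logs. The `send` callable is a hypothetical stand-in for whatever HTTP client you use and is expected to return the response status code.

```python
import time


def send_with_retry(send, payload, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call send(payload) until it returns something other than 503.

    Returns a (status, attempts) tuple. The delay doubles after each 503.
    """
    delay = base_delay
    for attempt in range(1, max_attempts + 1):
        status = send(payload)
        if status != 503:
            return status, attempt
        if attempt < max_attempts:
            sleep(delay)  # wait before retrying; the Source may still be flushing
            delay *= 2
    return 503, max_attempts
```

Capping `max_attempts` keeps a sender from retrying forever during a prolonged outage. What to do with the payload after the final 503 (buffer locally, drop, or alert) is left to the caller.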