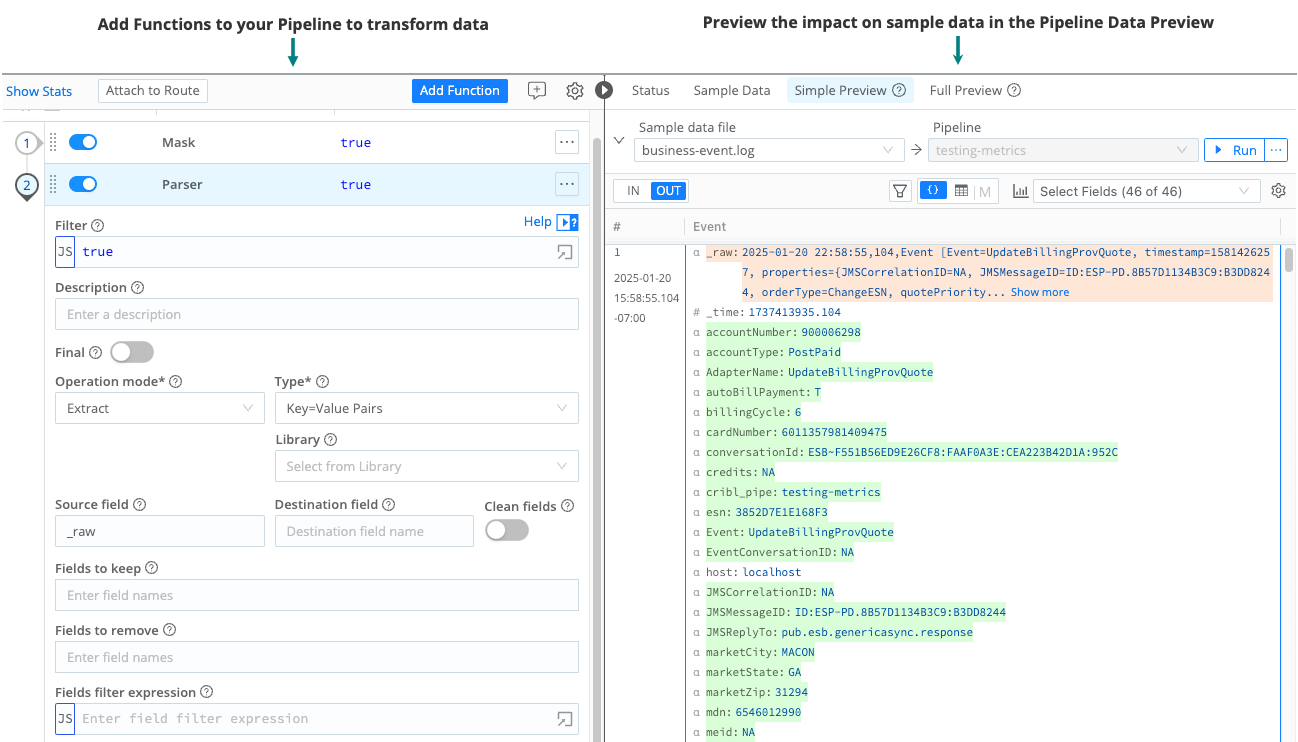

Data Preview

You can use the Data Preview tool to preview, test, and validate the Functions you add to a Pipeline or Route. It helps ensure that your Pipeline and Function configurations produce the expected output, giving you confidence that Cribl Stream processes your data as intended.

The Data Preview tool works by processing a set of sample events and passing them through the Pipeline. Then, it displays the inbound and outbound results in a dedicated pane. Whenever you modify, add, or remove a Function, the output updates instantly to reflect your changes.

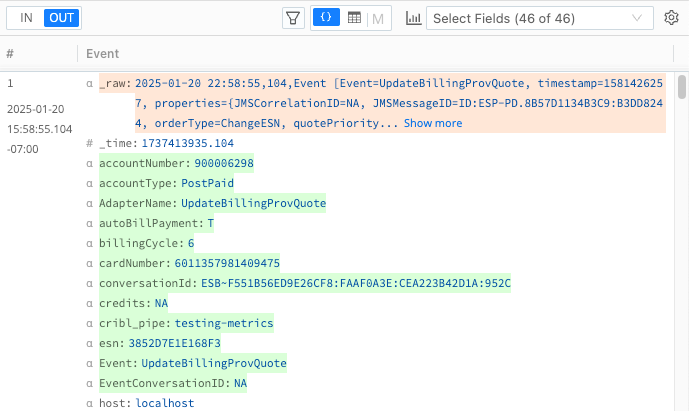

For example, suppose you add a Parser Function to your Pipeline to convert raw, unstructured data into structured key-value pairs. After parsing, you may choose to promote these key-value pairs to the field level to enhance data processing and analysis. To verify these changes meet your expectations, you can use the OUT tab in the Data Preview pane to review the transformed data.

In addition to previewing data, the Data Preview offers:

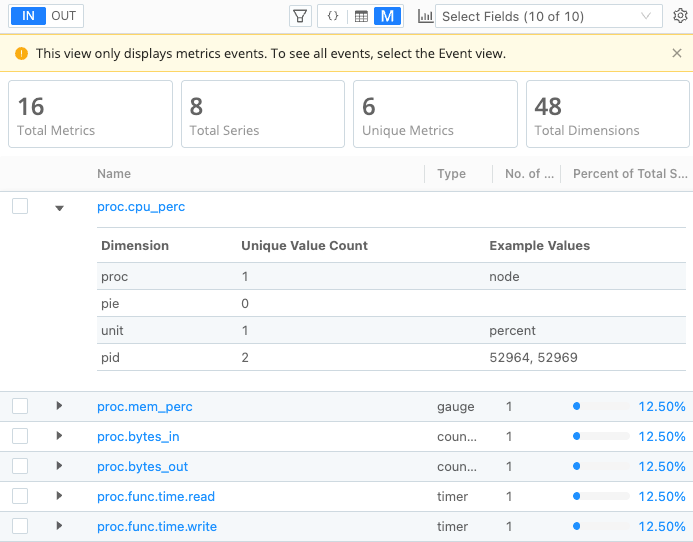

- Metrics View: This metrics-first view in the Data Preview pane automatically detects and visualizes metrics in your sample data, organizing them by metric name for a more intuitive experience. Designed for metrics-heavy datasets, this view provides insights into key metrics, dimensions, and time series. It helps you identify high-cardinality data (datasets with a large number of uncommon or unique values), enabling you to make informed decisions about data processing before forwarding it to potentially costly downstream receivers. See Metrics View and Manage Metrics and High Cardinality for more information.

- Pipeline diagnostics: Includes status updates and summary throughput statistics to assess the health of the Pipeline and its Functions. See Pipeline Diagnostics and Statistics for more details.

Data preview on the Simple Preview tab is limited to 10 MB of data. This cap prevents excessive data processing and safeguards system stability. If the dataset is more than 10 MB when a simple preview runs, Cribl Stream will limit the preview to 10 MB and stop processing data beyond that limit.

For hands-on experience with the Pipeline Data Preview, see the Get Started With Cribl Stream tutorial.

How to Use the Data Preview Tool

To use the Data Preview tool:

Create or import some sample data. See Add Sample Data for information about different methods to generate sample data.

Then, use the different Data Preview views to test and validate the changes you make in your Pipeline. See Explore Data and Validate Pipelines with Different Views to learn about the different views you can apply to your data.

Add Sample Data

To use the Data Preview tool, your Pipeline first needs sample data to work with. You can get sample data using a few methods:

- Upload a sample data file

- Copy data from a clipboard

- Import data from Cribl Edge Nodes

- Capture new sample data

- Capture live data from a Single Source or Destination

- Use a datagen or a sample data file provided by Cribl

When deciding which method to use, note that:

- The Import Data options work with content that needs to be broken into events, meaning it needs Event Breakers.

- The Capture Data options work with events only.

Cribl Event Breakers are regular expressions that tell Cribl Stream how to break the file or pasted content into events. Breaking occurs at the start of the match. Cribl Stream ships with several common breaker patterns out of the box, but you can also configure custom breakers. The UI here is interactive, and you can iterate until you find the exact pattern.
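To make the "breaking occurs at the start of the match" behavior concrete, here is a minimal Python sketch. It is not Cribl Stream's implementation: the `break_events` helper, the sample text, and the timestamp pattern are all assumptions chosen for illustration.

```python
import re

def break_events(text: str, breaker: str) -> list[str]:
    """Split text into events, starting a new event at the start of each match."""
    pattern = re.compile(breaker, re.MULTILINE)
    # Offsets where each match begins; a new event starts at each one.
    starts = [m.start() for m in pattern.finditer(text)]
    if not starts or starts[0] != 0:
        # Keep any content that precedes the first match as its own event.
        starts.insert(0, 0)
    bounds = starts + [len(text)]
    events = [text[a:b].strip("\n") for a, b in zip(bounds, bounds[1:])]
    return [e for e in events if e]

# Hypothetical sample: a two-line stack trace followed by a one-line event.
sample = (
    "2024-01-01 12:00:00 ERROR failed\n"
    "  at line 1\n"
    "2024-01-01 12:00:01 INFO ok\n"
)
events = break_events(sample, r"^\d{4}-\d{2}-\d{2} ")
```

Because the break happens where the timestamp begins, the indented continuation line stays attached to the first event instead of becoming an event of its own.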

If you notice fragmented events, check whether Cribl Stream has added a __timeoutFlush internal field to them. This diagnostic field’s presence indicates that Cribl Stream flushed the events because the Event Breaker buffer timed out while processing them. These timeouts can be due to large incoming events, backpressure, or other causes. See Event Model: Internal Fields for more information.
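If you have a sample available as newline-delimited JSON, a short script can list the events that carry this field. The sketch below assumes that internal fields are present in the exported text and that each line is one JSON event; the `find_timeout_flushed` helper and the sample events are hypothetical.

```python
import json

def find_timeout_flushed(ndjson_text: str) -> list[dict]:
    """Return the events that contain the __timeoutFlush internal field."""
    flagged = []
    for line in ndjson_text.splitlines():
        line = line.strip()
        if not line:
            continue  # Skip blank lines between events.
        event = json.loads(line)
        # Only the field's presence matters here; its value is not inspected.
        if "__timeoutFlush" in event:
            flagged.append(event)
    return flagged

# Hypothetical sample: the second event was flushed before it was complete.
sample = "\n".join([
    json.dumps({"_raw": "complete event"}),
    json.dumps({"_raw": "partial eve", "__timeoutFlush": True}),
])
flagged = find_timeout_flushed(sample)
```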

After you select Save as Sample File in any of the flows below, the new sample file is available from the Sample Data tab in the Data Preview pane.

Sample File Metadata

Whenever you create or edit a sample file, you work with a common set of metadata fields:

File name: The display name for the sample file. Choose a name that makes the sample easy to recognize in the Sample Data and Samples tabs.

Description: An optional, free-form description that explains what the sample represents (source system, time range, scenario, and so on).

Expiration time: A time-to-live (TTL), in hours, for the sample file. Each time you use the sample, for example in Data Preview, Pipeline testing, or when creating a datagen, the TTL resets. When a sample reaches its expiration without being used, Cribl Stream silently removes the sample and its backing file. Leave this field blank if you do not want the sample to expire automatically.

- In deployments that use Git-backed configuration bundles, expiration only affects the live configuration on disk. If you commit while the sample exists, that commit continues to contain the sample. When the sample later expires, its removal is just another change that you can commit in a later revision. If the sample expires before you commit, it is cleaned up without ever being recorded in Git.

Tags: Optional labels that you can use to organize and search for samples (for example, by source, environment, or use case).

Upload a Sample Data File

To upload a sample data file:

- In the sidebar, select Worker Groups, then select a Worker Group. On the Worker Groups submenu, select Processing, then Pipelines.

- Select the Sample Data tab in the Data Preview pane. Select Import Data.

- In the Import Sample Data modal, drag and drop your sample file or select Upload to navigate to the file on your computer.

- Select an appropriate option from the Event Breaker Settings menu.

- Configure the sample’s metadata (File name, Description, Expiration time, and Tags) as described in Sample File Metadata.

- Add or drop fields as needed.

Copy Data from a Clipboard

To copy sample data from a clipboard:

In the sidebar, select Worker Groups, then select a Worker Group. On the Worker Groups submenu, select Processing, then Pipelines.

Select the Sample Data tab in the Data Preview pane. Select Import Data.

In the Import Sample Data modal, paste data from your computer clipboard into the provided field.

Select an appropriate option from the Event Breaker Settings menu.

Configure the sample’s metadata (File name, Description, Expiration time, and Tags) as described in Sample File Metadata.

Add or drop fields as needed.

Import Data from Cribl Edge Nodes

To import data from Cribl Edge Nodes, you must have at least one working Edge Node. To upload data from a file on an Edge Node:

- In the sidebar, select Worker Groups, then select a Worker Group. On the Worker Groups submenu, select Processing, then Pipelines.

- Select the Sample Data tab in the Data Preview pane. Select Import Data.

- In the Import Sample Data modal, select Edge Data and navigate to the Edge Node that has the stored file.

- In Manual mode, use the available filters to narrow the results:

- Path: The location from which to discover files.

- Allowlist: Supports wildcard syntax and supports the exclamation mark (!) for negation.

- Max depth: Sets which layers of files to return highlighted in bold typeface. By default, this field is empty, which implicitly specifies 0. This default boldfaces only the top-level files within the Path.

- Once you find the file you want, select its name to add its contents to the Add Sample Data modal.

- Select an appropriate option from the Event Breaker Settings menu.

- Configure the sample’s metadata (File name, Description, Expiration time, and Tags) as described in Sample File Metadata.

- Add or drop fields as needed.

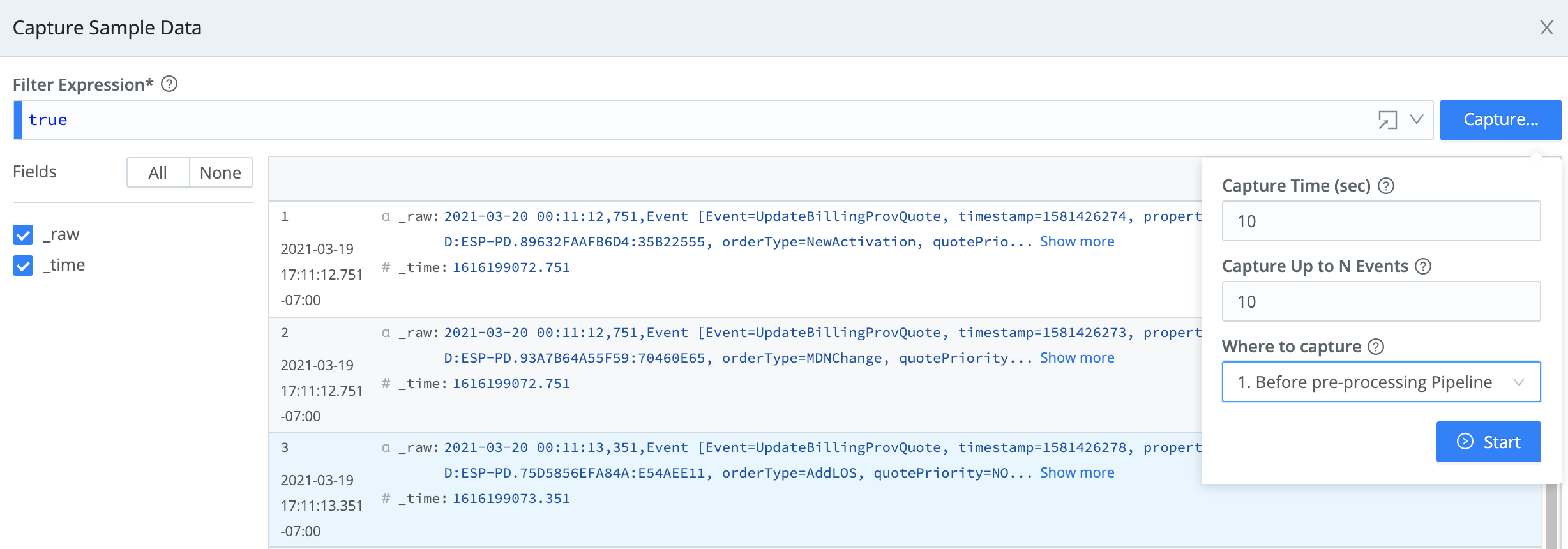

Capture New Sample Data

The Capture Data mode does not require event breaking. To capture live data, you must have Worker Nodes registered to the Worker Group for which you’re viewing events. You can view registered Worker Nodes from the Status tab in the Source.

To capture new sample data:

- In the sidebar, select Worker Groups, then select a Worker Group. On the Worker Groups submenu, select Processing, then Pipelines.

- Select the Sample Data tab in the Data Preview pane. Select Capture Data.

- Select Capture. Accept the default settings and select Start.

- When the modal finishes populating with events, select Save as Sample File. In the sample file settings, configure the sample’s metadata (File name, Description, Expiration time, and Tags) as described in Sample File Metadata.

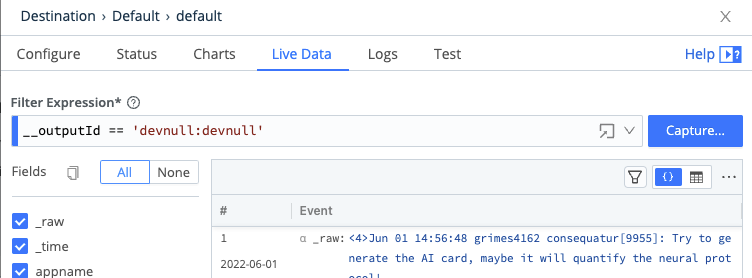

Capture Live Data from a Single Source or Destination

To capture data from a single enabled Source or Destination, it’s fastest to use the Sources or Destinations pages instead of the Preview pane.

To capture live data:

In the sidebar, select Worker Groups, then select a Worker Group. On the Worker Groups submenu, select Data, then Sources or Destinations.

Select Live on the Source or Destination configuration row to initiate a live capture.

Select Capture. Accept or modify the default settings as needed and select Start.

When the modal finishes populating with events, select Save as Sample File. In the sample file settings, configure the sample’s metadata (File name, Description, Expiration time, and Tags) as described in Sample File Metadata.

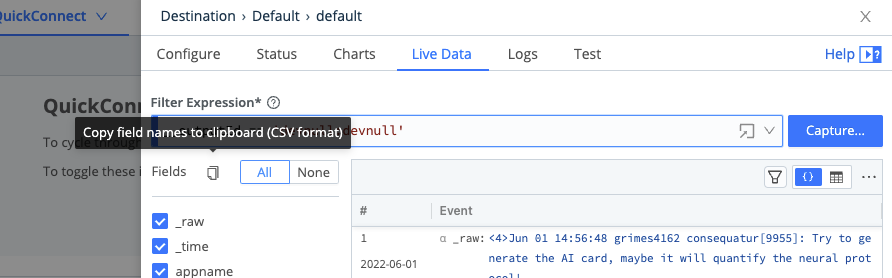

Alternatively, you can start an immediate capture from within an enabled Source’s or Destination’s configuration modal, by selecting the modal’s Live Data tab.

Beside the Fields selector on the Live Data tab is a Copy button, which enables you to copy the field names to the clipboard in CSV format. The Logs tab also provides this Copy button.

Use a Datagen or Sample Data File from Cribl

You can use the Cribl-provided datagens or sample data files to test and validate your data. See Using Datagens for more information.

Modify Sample Files

To modify existing sample files:

In the right Preview pane, select the Samples tab.

Hover over the file name you want to modify to show an edit (pencil) button next to the file name.

Select that button to open the modal shown below. It provides options to edit, clone, or delete the sample, save it as a datagen Source, or modify its metadata (File name, Description, Expiration time, and Tags). See Sample File Metadata for details on these fields.

To make changes to the sample, select the modal’s Edit Sample button. This opens the Edit Sample modal shown below, exposing the sample’s raw data.

Edit the raw data as desired.

Select Update Sample to resave the modified sample, or select Save as New Sample to give the modified version a new name.

Troubleshoot Sample Data

This section describes some strategies for managing potential issues with sample data that you might encounter.

Sample Sizes Are Too Large

To prevent in-memory samples from getting unreasonably large, Cribl Stream constrains all data samples by a limit set as follows:

- On a single instance at Settings > General Settings > Limits > Storage > Sample size limit.

- On distributed Worker Groups (both Cribl-managed and customer-managed) at Group Settings > General Settings > Limits > Storage > Sample size limit.

In each location, the default limit is 256 KB. You can adjust this down or up to a maximum of 3 MB.

Integer Values Are Too Large

The JavaScript implementation in Cribl Stream can safely represent integers only up to the Number.MAX_SAFE_INTEGER constant of about 9 quadrillion (precisely, 2^53 - 1). Data Preview will round down any integer larger than this, and trailing zeroes might indicate such rounding.
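You can reproduce this precision loss outside Cribl Stream. The Python sketch below is an illustration only: it assumes that converting through a Python float (a 64-bit IEEE 754 double, the same representation JavaScript uses for Number) is a fair stand-in, and the helper names are hypothetical.

```python
# Value of JavaScript's Number.MAX_SAFE_INTEGER: 9007199254740991.
MAX_SAFE_INTEGER = 2**53 - 1

def is_safe_integer(n: int) -> bool:
    """True if n fits within the safely representable integer range."""
    return abs(n) <= MAX_SAFE_INTEGER

def as_double(n: int) -> int:
    """Round-trip an integer through a 64-bit float."""
    return int(float(n))

big = 9007199254740993             # 2**53 + 1, one past the first unsafe value
print(is_safe_integer(big))        # False
print(as_double(big))              # 9007199254740992: the last digit changed
```

Checking values with a function like `is_safe_integer` before you build sample data can help you spot fields, such as large numeric IDs, that might be better handled as strings.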

Change Field Displays

In the Capture Data and Live Data modals, use the Fields sidebar to streamline how events display. You can toggle among All fields, None (to reset the display), and check boxes that enable or disable individual fields by name.

Within the right Preview pane, each field’s type is indicated by one of these leading symbols:

| Symbol | Meaning |

|---|---|

| α | string |

| # | numeric |

| b | boolean |

| m | metric |

| {} | JSON object |

| [] | array |

On JSON objects and arrays:

| Symbol | Meaning |

|---|---|

| + | expandable |

| - | collapsible |

Explore Data and Validate Pipelines with Different Views

Once you have created or imported sample data for your Pipeline, you can explore and analyze it using the various views available in the Data Preview pane. These views allow you to preview and test changes in real time, helping ensure that your data processing Functions produce the expected results. By leveraging the Data Preview, you can confidently validate that your Pipeline transformations align with your intended outcomes.

Simple or Full Previews

Select Simple Preview or Full Preview beside a file name to display its events in the Preview pane:

- Simple Preview: View events on either the IN or the OUT (processed) side of a single Pipeline.

- Full Preview: View events on the OUT side of either the processing or post-processing Pipeline. Selecting this option expands the Preview pane’s upper controls to include an Exit Point drop-down, where you make this choice.

Before and After Views

The Data Preview pane offers two time-based display options for previewing sample data:

- The IN tab displays samples as they appear on the way in to the Pipeline, before data processing.

- The OUT tab displays the same information, but shows the sample data as it will appear on the way out of the Pipeline, after data processing. Use the Select Fields drop-down menu to control which fields the OUT tab displays.

In most cases, you will use the OUT tab to preview, test, and validate your Pipeline.

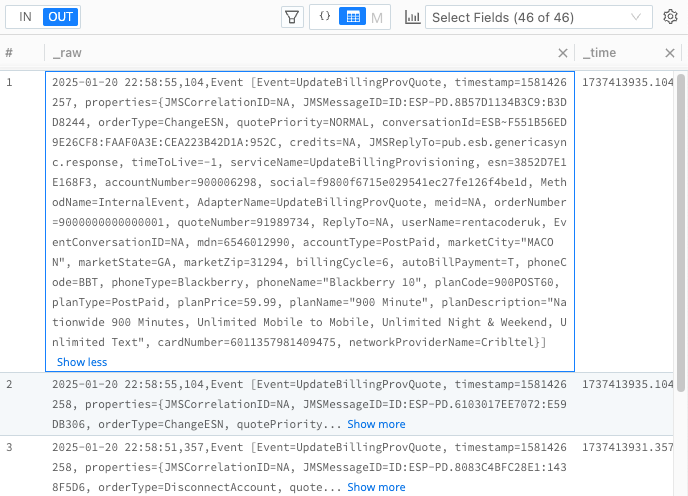

Event and Table Views

The Data Preview toolbar has several available views. Each format can be useful, depending on the type of data you are previewing.

The Event View displays data in JSON format for both the IN and OUT tabs.

The Table View displays data as a table for both the IN and OUT tabs.

Metrics View

If your data sample contains metric events, the Data Preview automatically switches to the Metrics view. It intelligently detects the underlying data structure and switches to this view when needed to display relevant insights. This metrics-first view allows you to visualize metric events as you build your Pipeline. It allows you to analyze metrics, dimensions, time series, the number of unique values, and the percentage each metric contributes to the total time series. See Manage Metrics and High Cardinality for more information about using Metrics View.
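To get a feel for the figures that a metrics-first view reports, the following Python sketch counts unique time series per metric name and each metric's share of the total. The event shape (`name` and `dimensions` keys) and the `summarize_metrics` helper are assumptions made for illustration; they do not describe how Cribl Stream stores or computes these values.

```python
from collections import defaultdict

def summarize_metrics(events: list[dict]) -> dict[str, dict]:
    """Count unique time series per metric and each metric's share of the total."""
    series: dict[str, set] = defaultdict(set)
    for event in events:
        # A time series is one unique combination of dimension values.
        dims = tuple(sorted(event["dimensions"].items()))
        series[event["name"]].add(dims)
    total = sum(len(s) for s in series.values())
    return {
        name: {"series": len(s), "percent": round(100 * len(s) / total, 1)}
        for name, s in series.items()
    }

# Hypothetical metric events; the third repeats an existing time series.
sample = [
    {"name": "cpu.usage", "dimensions": {"host": "a"}, "value": 0.4},
    {"name": "cpu.usage", "dimensions": {"host": "b"}, "value": 0.7},
    {"name": "cpu.usage", "dimensions": {"host": "a"}, "value": 0.5},
    {"name": "mem.used", "dimensions": {"host": "a"}, "value": 512},
]
summary = summarize_metrics(sample)
```

A dimension with many unique values, such as a request ID, multiplies the series count for its metric, which is the high-cardinality pattern this view is meant to surface.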

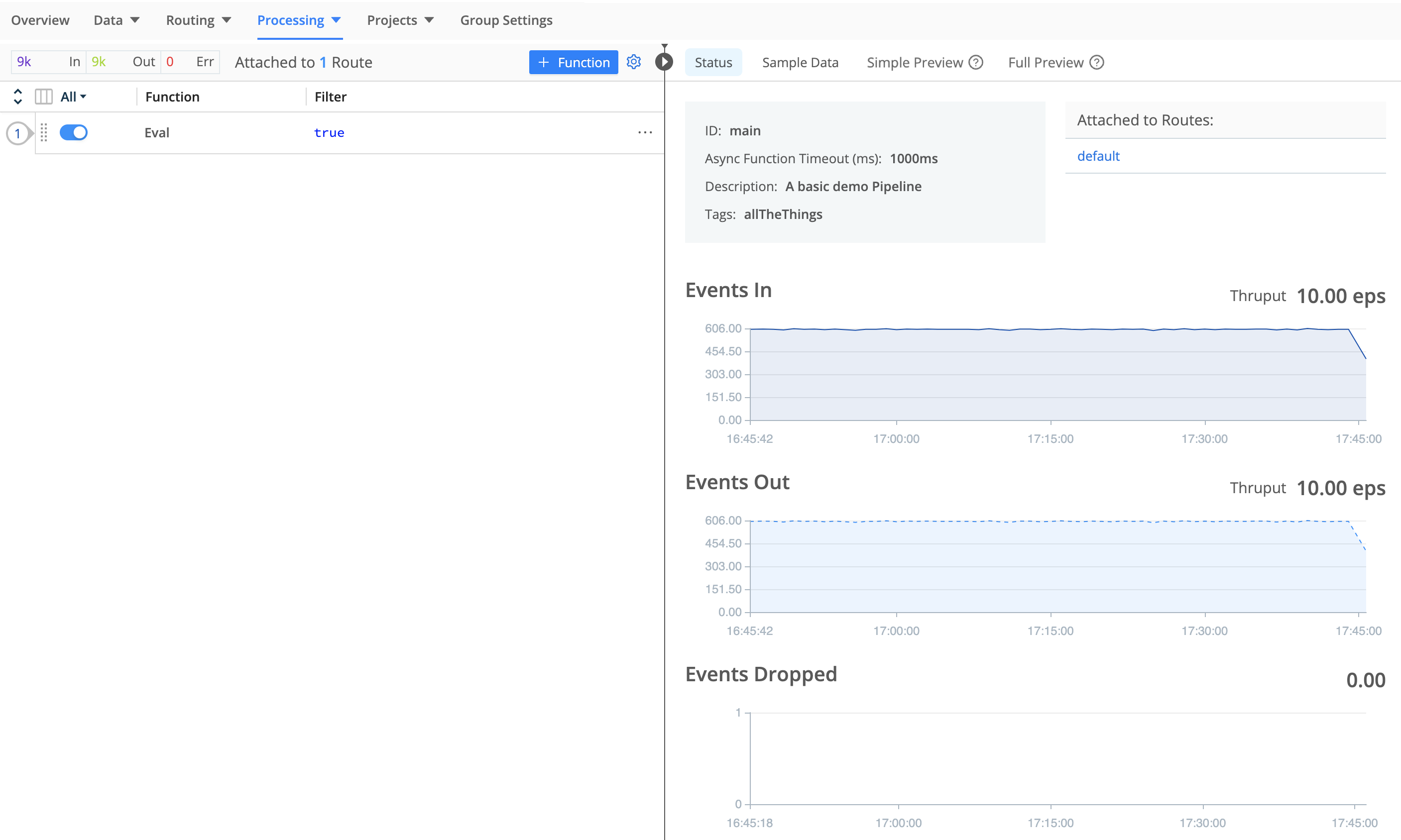

Pipeline Diagnostics and Statistics

This section explains the available tools in the Data Preview that you can use to get information about your Pipeline and Functions.

Check Pipeline Status

From the Pipelines page, select any Pipeline to display a Status tab in the Data Preview pane. Select this tab to show graphs of the Pipeline Events In, Events Out, and Events Dropped over time, along with summary throughput statistics (in events per second).

Pipeline Profiling

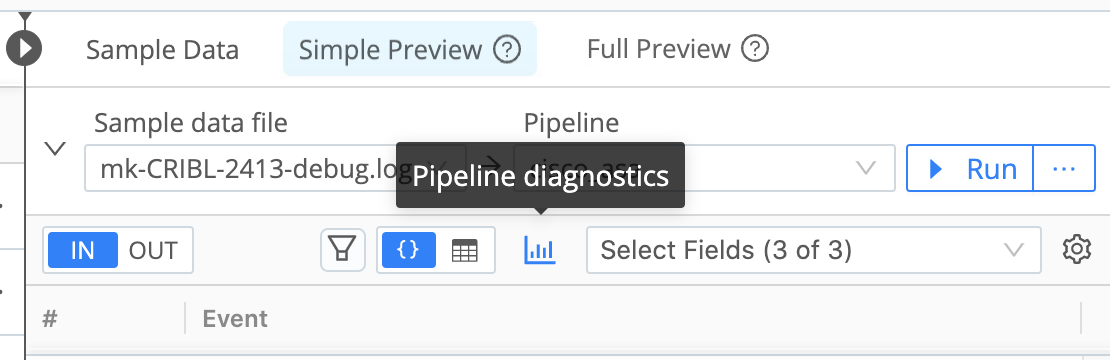

At the top of the Preview pane, you can select a sample data file and Pipeline, then select the Pipeline diagnostics (bar-graph) button to access statistics on the selected Pipeline’s performance.

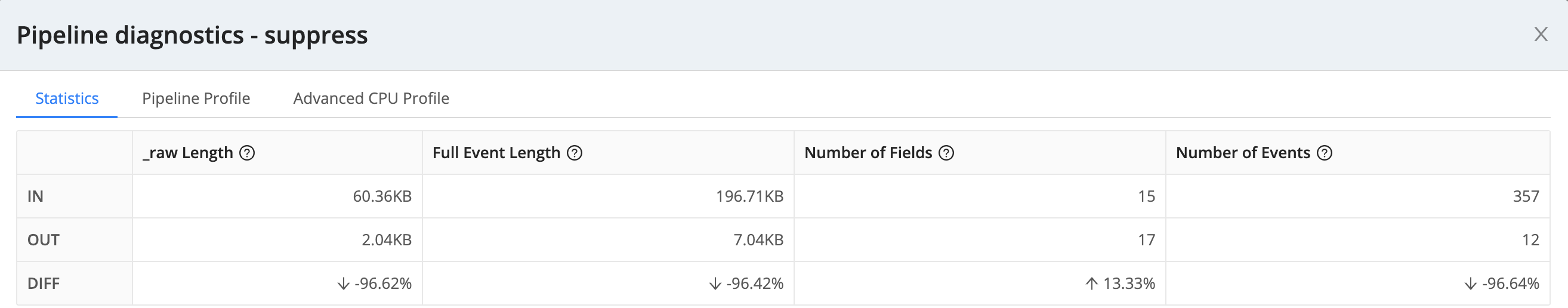

The Statistics tab in the resulting modal displays the processing impact of the Pipeline on field length and counts.

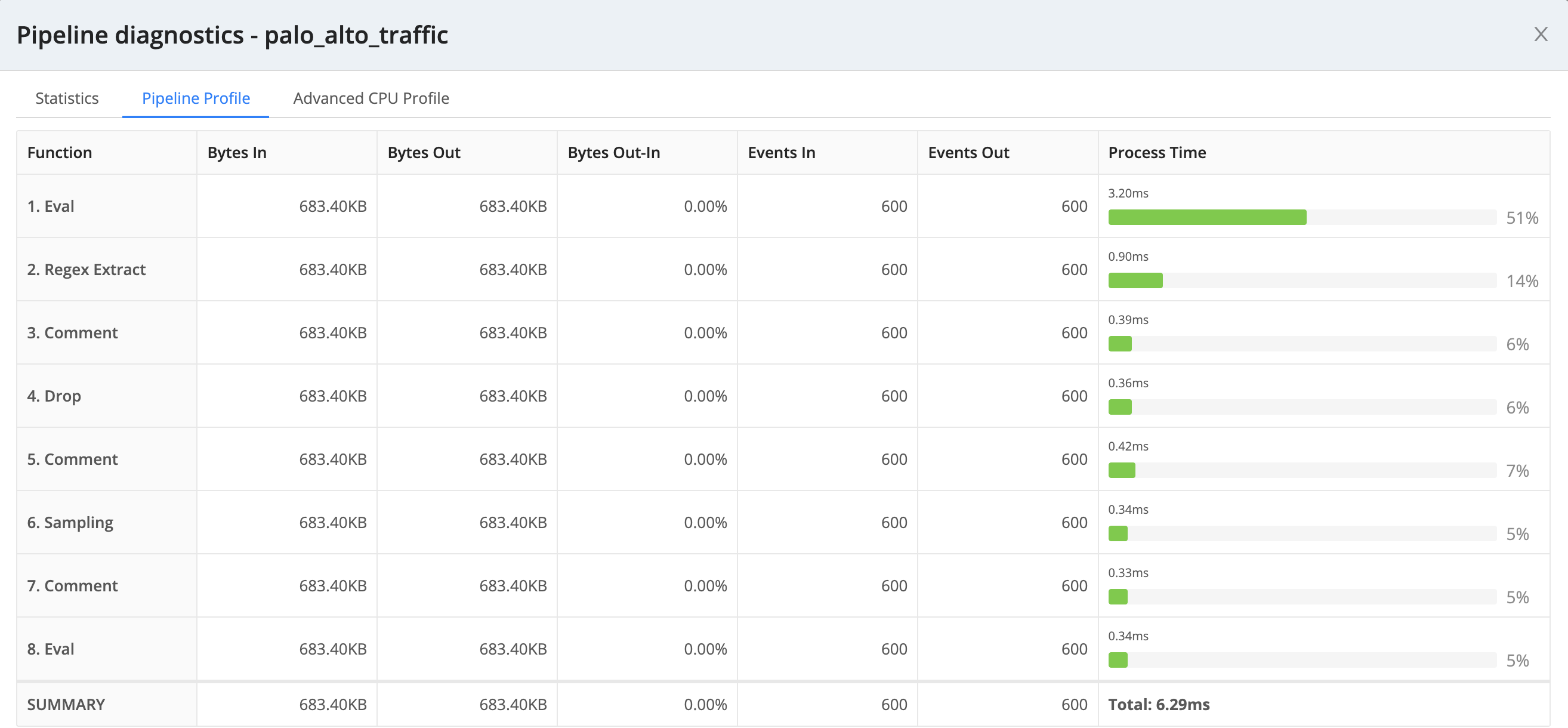

The Pipeline Profile tab, available from the Simple Preview tab, helps you debug your Pipeline by showing the contribution of individual Functions to data volume, events count, and the Pipeline processing time. Every Function has a minimum processing time, including Functions that make no changes to event data, such as the Comment Function.

When you profile a Chain Function, this tab condenses all of the chained Pipeline’s processing into a single Chain row. To see individual statistics on the aggregated Functions, profile the chained Pipeline separately.
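The idea behind per-Function profiling can be sketched in a few lines of Python. This is not Cribl Stream's profiler: the `profile_pipeline` helper, the two stand-in Functions, and the convention that returning `None` drops an event are all assumptions for illustration.

```python
import time

def profile_pipeline(events: list[dict], functions: list[tuple]) -> list[dict]:
    """Run events through each function in order, recording counts and elapsed time."""
    rows = []
    for name, fn in functions:
        start = time.perf_counter()
        out = []
        for event in events:
            result = fn(event)
            if result is not None:  # None means the function dropped the event.
                out.append(result)
        elapsed = time.perf_counter() - start
        rows.append({
            "function": name,
            "events_in": len(events),
            "events_out": len(out),
            "seconds": elapsed,
        })
        events = out  # The next function sees only the surviving events.
    return rows

def comment(event: dict) -> dict:
    """A no-op stand-in: it changes nothing but still takes time to call."""
    return event

def drop_debug(event: dict):
    """Drop events whose level is debug."""
    return None if event.get("level") == "debug" else event

sample_events = [{"level": "info"}, {"level": "debug"}, {"level": "error"}]
rows = profile_pipeline(sample_events, [("comment", comment), ("drop_debug", drop_debug)])
```

Even the no-op function produces a row with a nonzero call cost, which mirrors the point above that every Function has a minimum processing time.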

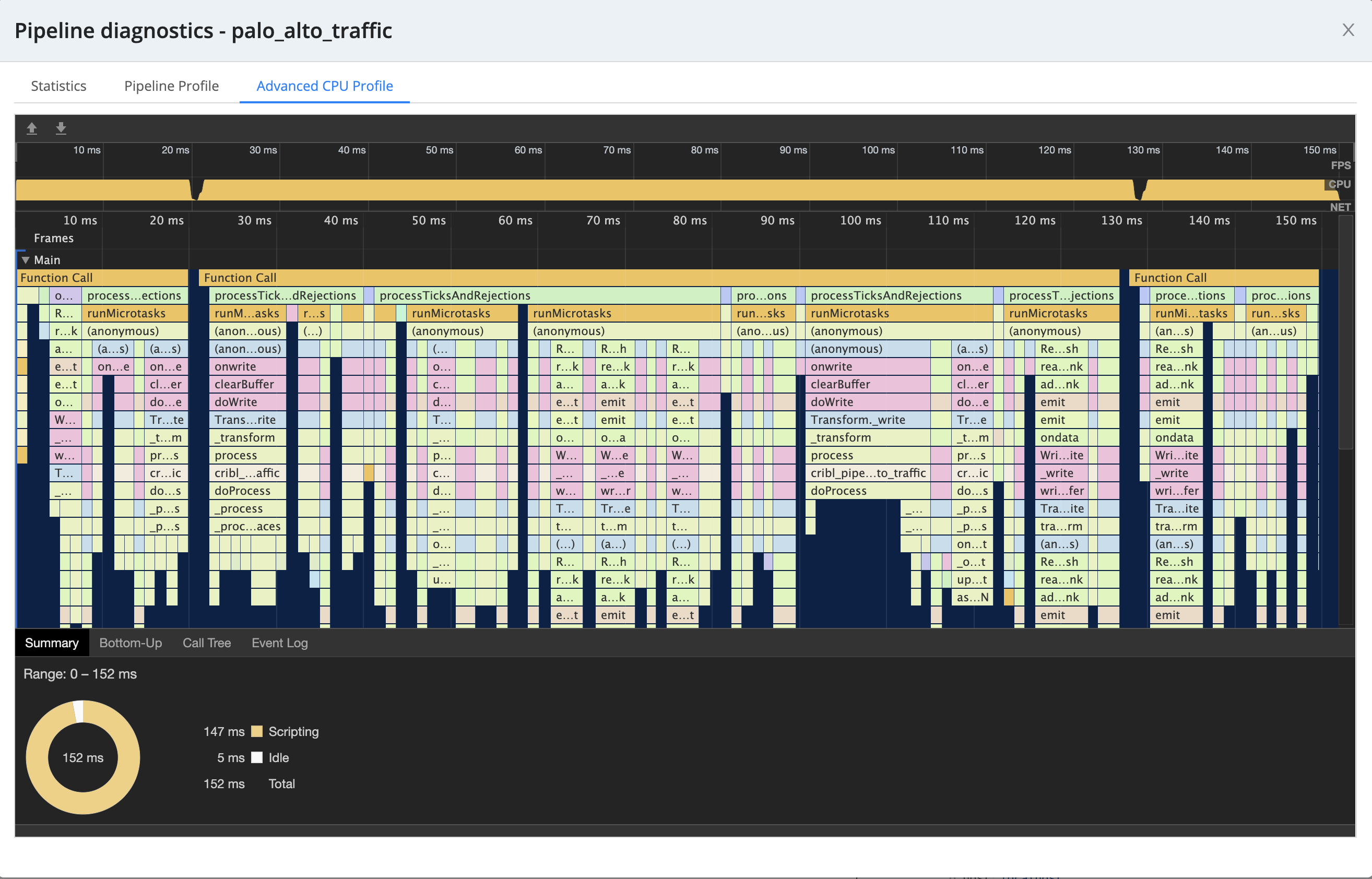

The Advanced CPU Profile tab, available from the Simple Preview tab, displays richer details on individual Function stacks.

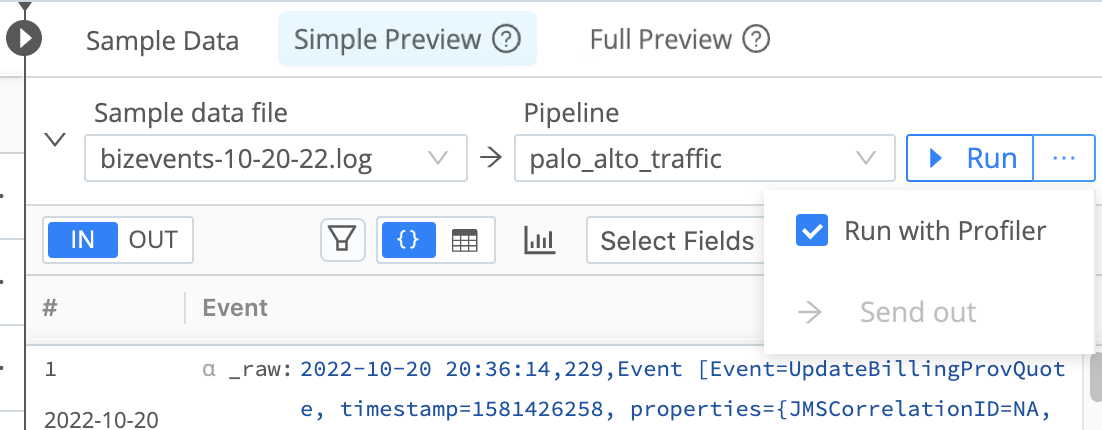

You can disable the profiling features to reclaim the (minimal) resources they consume. To disable profiling features, select the drop-down list beside the Run button and clear the Run with Profiler check box.

Advanced Settings

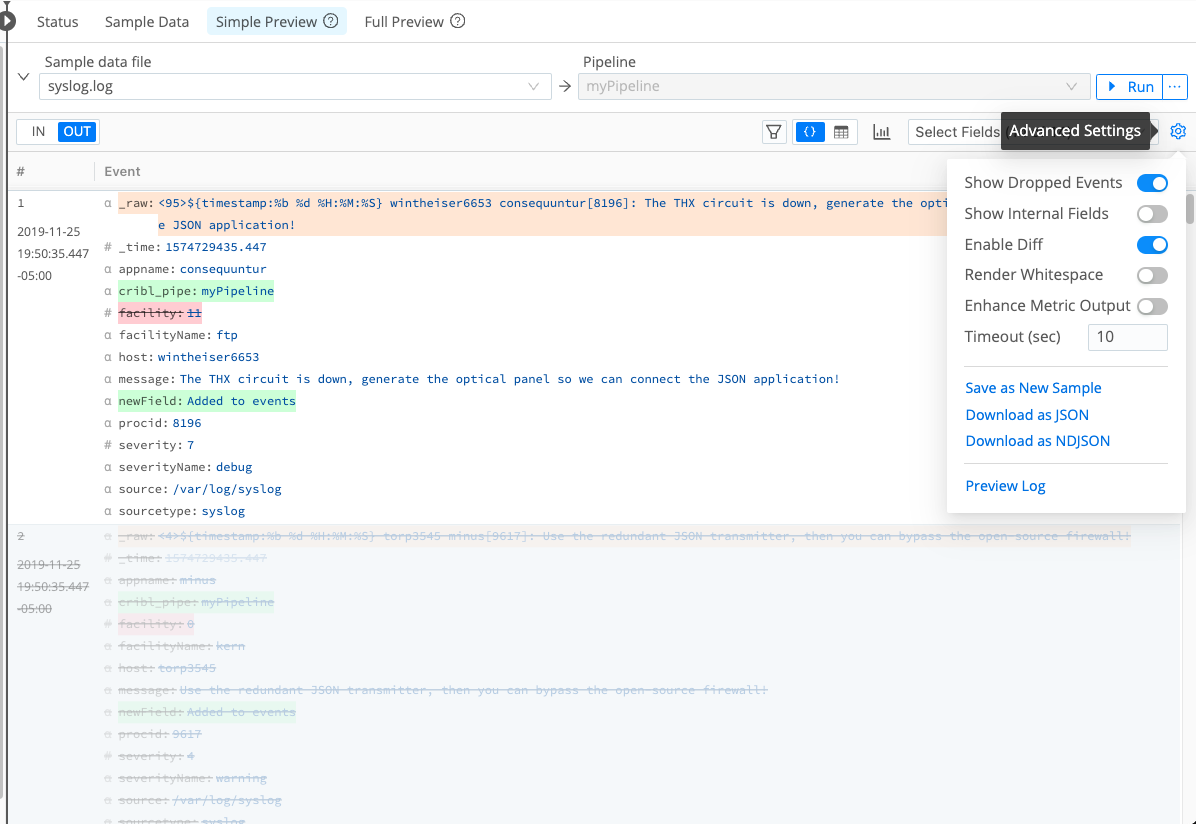

The Advanced Settings menu includes Show Dropped Events, Show Internal Fields, and Enable Diff toggles, which control how the OUT tab displays changes to event data.

If you enable the Show Dropped Events and Enable Diff toggles, the OUT tab displays event data as follows:

- Modified fields are highlighted in amber.

- Added fields are highlighted in green.

- Deleted fields are highlighted in red and displayed in strikethrough text.

- Dropped events are displayed in strikethrough text.
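The field-level categories in this list can be approximated with a simple comparison of an event before and after processing. The Python sketch below is an assumption-laden illustration, not the logic Cribl Stream uses; the `diff_event` helper and the sample events are hypothetical.

```python
def diff_event(before: dict, after: dict) -> dict[str, list[str]]:
    """Classify field names as added, deleted, or modified between two versions of an event."""
    return {
        "added": sorted(k for k in after if k not in before),
        "deleted": sorted(k for k in before if k not in after),
        "modified": sorted(
            k for k in before if k in after and before[k] != after[k]
        ),
    }

# Hypothetical IN and OUT versions of the same event.
event_in = {"_raw": "user=alice status=200", "host": "web01", "tmp": "x"}
event_out = {"_raw": "user=alice status=200", "host": "web-01", "user": "alice"}

changes = diff_event(event_in, event_out)
print(changes)
# {'added': ['user'], 'deleted': ['tmp'], 'modified': ['host']}
```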

The rest of this section describes the additional fields available in Advanced Settings.

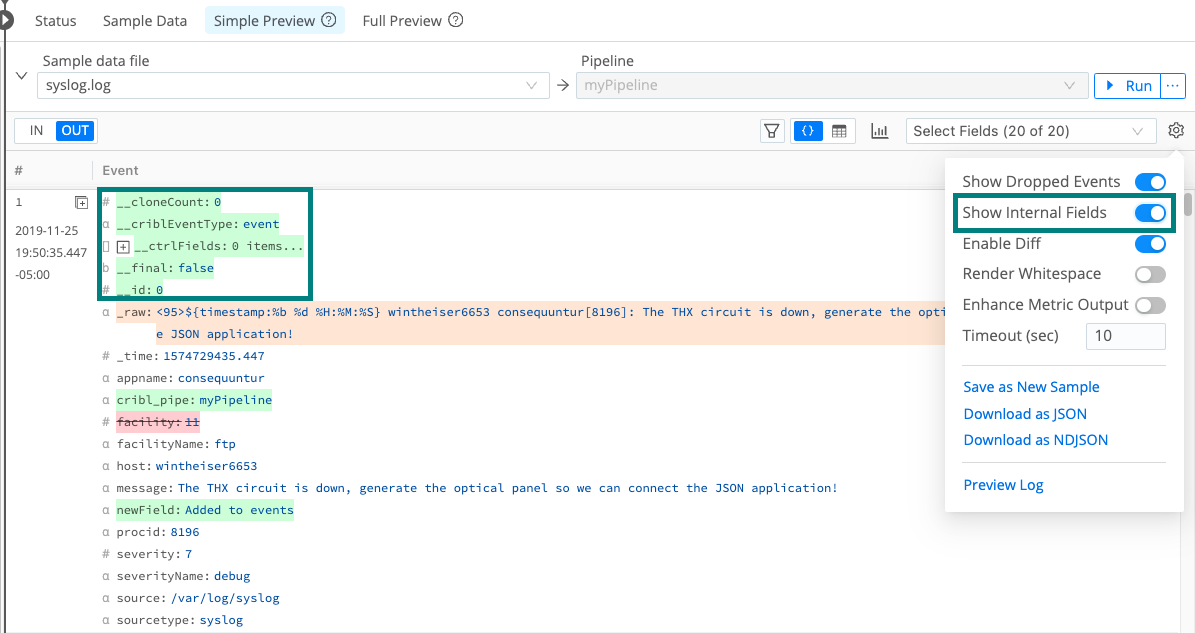

Show Internal Fields: Display fields that Cribl Stream adds to events, as well as Source-specific fields that Cribl Stream forwards from upstream senders.

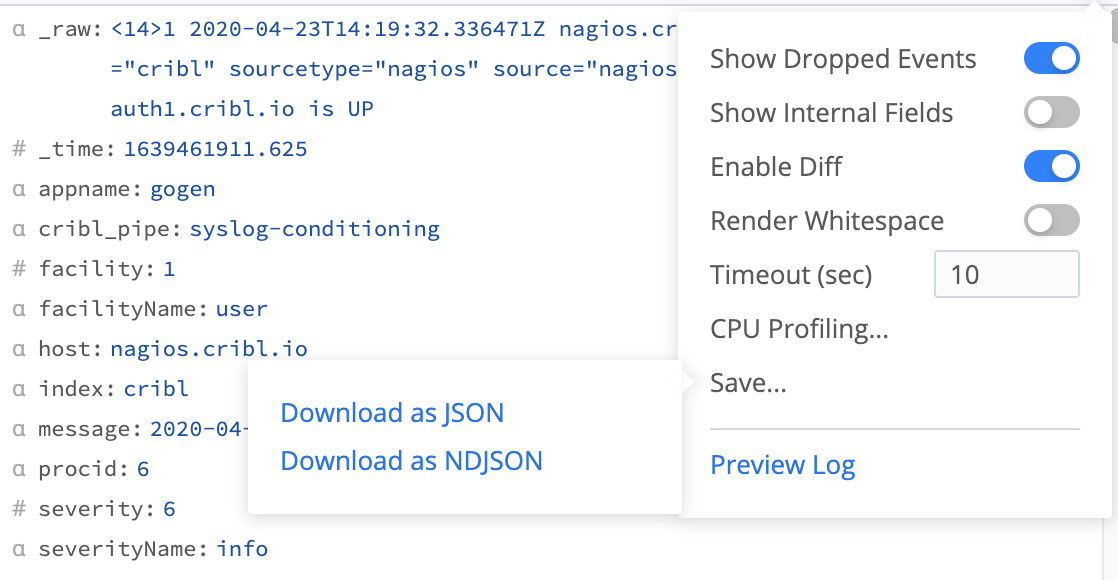

Render Whitespace: This toggles between displaying carriage returns, newlines, tabs, and spaces as white space, versus as (respectively) the symbols ␍, ↵, →, and ·.
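As a rough illustration of the symbol mapping that Render Whitespace describes, the following Python sketch substitutes each whitespace character with its visible symbol. The `render_whitespace` helper is hypothetical and only mimics the display; it does not modify event data in Cribl Stream.

```python
# Symbols for carriage return, newline, tab, and space, respectively.
WHITESPACE_SYMBOLS = {"\r": "␍", "\n": "↵", "\t": "→", " ": "·"}

def render_whitespace(text: str) -> str:
    """Replace each whitespace character with its visible symbol."""
    return text.translate(str.maketrans(WHITESPACE_SYMBOLS))

rendered = render_whitespace("a b\tc\r\n")
print(rendered)  # a·b→c␍↵
```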

Enhance Metric Output: Controls how the OUT tab presents the Metric name expression resulting from the Publish Metrics Function. When you disable this setting (default), Cribl Stream represents the Metric name expression as a literal expression string. Enable the setting to see the expression evaluated in the OUT tab.

Timeout (Sec): If large sample files time out before they fully load, you can adjust this setting to increase the default value of 10 seconds. Cribl Stream interprets a blank field as the minimum allowed timeout value of 1 second.

Save as New Sample: Enables you to save your captured data to a file, using either the Download as JSON or the Download as NDJSON (Newline-Delimited JSON) option.

Preview Log: Opens a modal where you can preview Cribl Stream’s internal logs summarizing how this data sample was processed and captured.
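If you are unsure which of the two download formats offered by Save as New Sample suits your tooling, the Python sketch below contrasts them. It assumes the JSON option produces a single array of events and the NDJSON option produces one event per line; the exact layout of the downloaded files is not confirmed here, and the helper names and sample events are hypothetical.

```python
import json

# Hypothetical captured events.
events = [
    {"_time": 1700000000, "_raw": "first event"},
    {"_time": 1700000001, "_raw": "second event"},
]

def to_json(events: list[dict]) -> str:
    """Serialize all events as a single JSON array."""
    return json.dumps(events, indent=2)

def to_ndjson(events: list[dict]) -> str:
    """Serialize one compact JSON object per line."""
    return "\n".join(json.dumps(e) for e in events) + "\n"

def from_ndjson(text: str) -> list[dict]:
    """Parse NDJSON back into a list of events, ignoring blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]
```

NDJSON can be read and processed one line at a time, which tends to suit large samples and line-oriented tools, while a single JSON document must be parsed as a whole.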